Purpose of the article:

The purpose of this article is to discuss Cloud Composer, which orchestrates the overall data pipeline in GCP’s cloud environment using standard Python scripts inside DAGs.

Intended Audience:

Everyone

Tools and Technology:

Tool: Cloud Composer

Technology: Google Cloud Platform

Keywords:

Cloud Composer, Apache Airflow, GCP DAG

Introduction:

This article explains how to build a directed acyclic graph (DAG) in the Google Cloud environment using a service called Cloud Composer. Cloud Composer is a managed Apache Airflow service that helps you create, schedule, monitor, and manage workflows. It lets you use Airflow-native tools, such as the Airflow web interface and command-line tools, so that you can focus on your workflows and not on the infrastructure. A directed acyclic graph is a collection of the tasks that you intend to run, organized by their dependencies. DAGs are defined in Python files placed in Airflow’s DAG_FOLDER, which is created automatically along with the Composer environment.

Cloud Composer offers the following benefits. Comprehensive GCP integration: orchestrate your entire GCP pipeline through Cloud Composer. Hybrid and multi-cloud environments: connect your pipeline across environments through a single orchestration service. Easy workflow orchestration: get moving quickly by coding your workflows in Python. Open source: ensure freedom from lock-in through Apache Airflow’s open-source portability. You create a Cloud Composer environment within a project; each environment is a managed deployment of Apache Airflow secured with IAM.
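To make the idea of a directed acyclic graph concrete, here is a minimal sketch that uses only Python's standard library (`graphlib`, Python 3.9 or later), not Airflow itself. The task names are hypothetical and only mirror the kind of pipeline discussed in this article. It shows how the dependencies between tasks determine a valid run order.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: each key maps to the set of tasks it depends on
# (its upstream tasks). These names are illustrative, not taken from a real DAG.
dependencies = {
    "create_dataset": set(),
    "load_gcs_to_bigquery": {"create_dataset"},
    "run_query": {"load_gcs_to_bigquery"},
    "send_email": {"run_query"},
}

# static_order() yields each task only after all of its upstream tasks.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)
```

If the dependencies contained a cycle, `TopologicalSorter` would raise `graphlib.CycleError`, which is why a workflow has to be acyclic to be schedulable.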

Discussed Features:

• Creating a Cloud Composer environment and then building a DAG in the Airflow interface

• Triggering the DAG, which loads data into Google BigQuery from a GCS bucket, performs all the tasks defined in the Python code, and sends DAG updates by email (Gmail).

Building DAG:

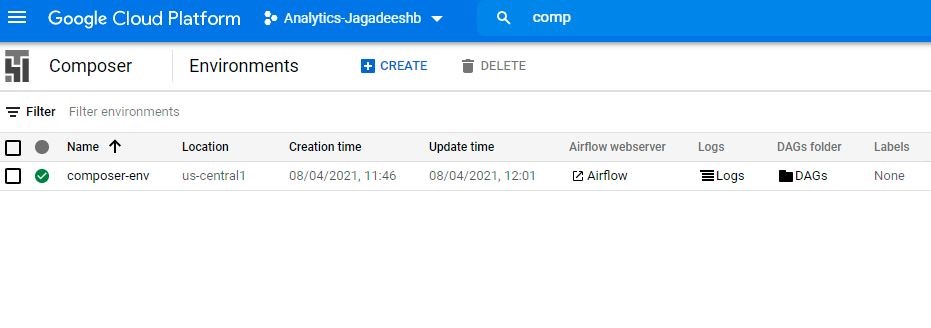

Step-1: In the Cloud Console, navigate to the Cloud Composer service and create an environment.

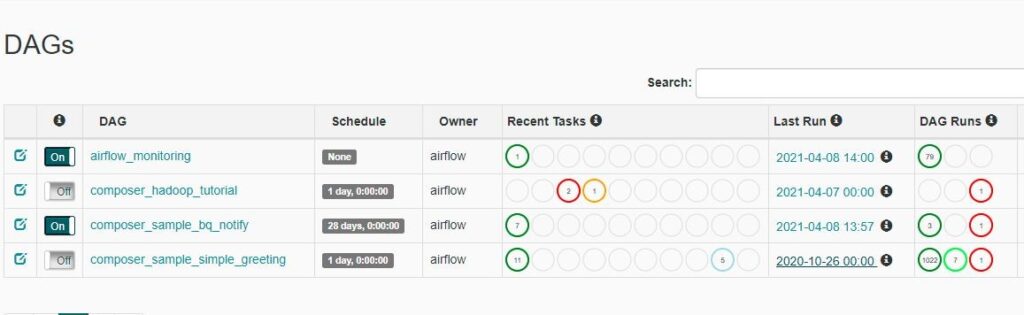

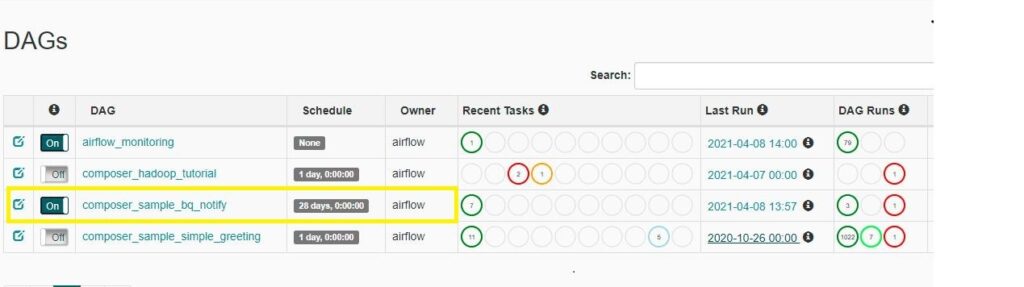

Step-2: After the environment is created, click the Airflow link shown in the screenshot above to open the Airflow interface, where you can see the list of all the DAGs you have created.

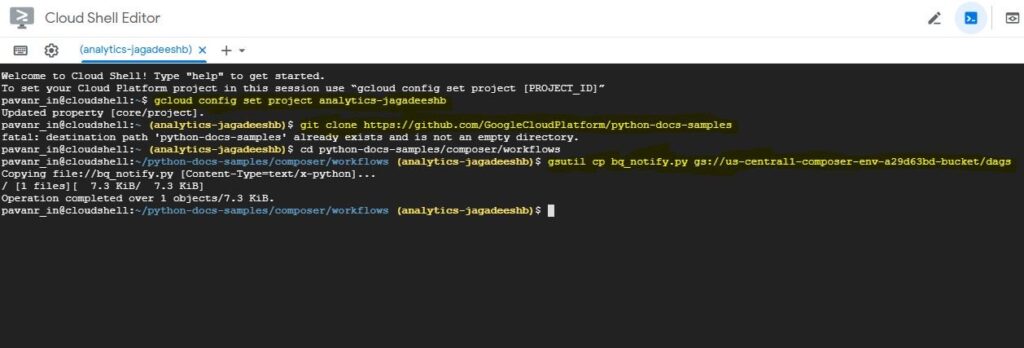

Step-3: Now go back to the Cloud Console and click the Activate Cloud Shell button in the top-right toolbar.

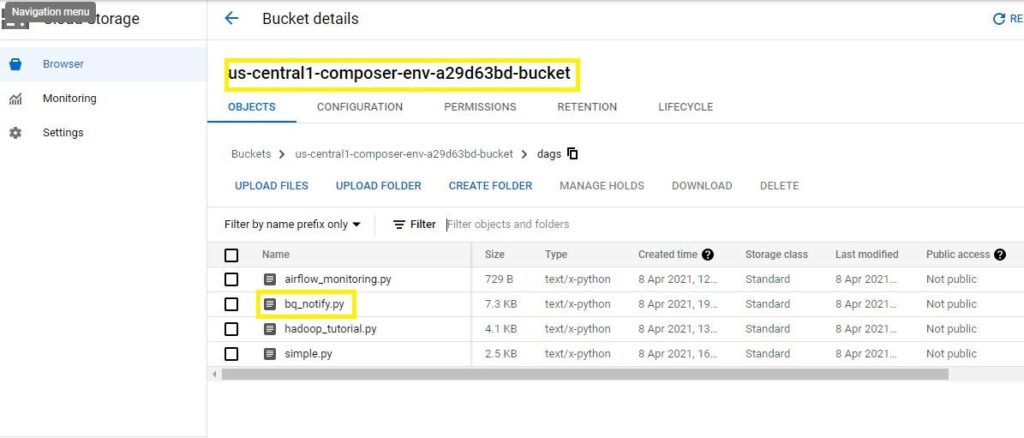

Step-4: When you create a Composer environment, a Cloud Storage bucket is created automatically; your DAG folder (DAG_FOLDER) resides in this bucket.

Step-5: Now, in Cloud Shell, clone the repository that contains your Python code and copy your .py file into the DAG folder of the bucket using the gsutil cp command.

- gcloud config set project analytics-jagadeeshb

- git clone https://github.com/GoogleCloudPlatform/python-docs-samples

- cd python-docs-samples/composer/workflows

- gsutil cp bq_notify.py gs://us-central1-composer-env-a29d63bd-bucket/dags
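The copy in Step-5 can also be scripted. The sketch below only builds the `gsutil cp` command as an argument list and prints it. The helper name `build_dag_upload_command` is hypothetical, and the bucket name is the one used in the commands above. To actually run the command, pass the list to `subprocess.run` in an environment where `gsutil` is installed and authenticated.

```python
import shlex


def build_dag_upload_command(dag_file: str, bucket: str) -> list[str]:
    """Return the gsutil command that copies a DAG file into the bucket's dags folder."""
    if not dag_file.endswith(".py"):
        raise ValueError("DAG files must be Python (.py) files")
    destination = f"gs://{bucket}/dags"
    return ["gsutil", "cp", dag_file, destination]


# Bucket name taken from the example commands; yours will differ.
command = build_dag_upload_command(
    "bq_notify.py", "us-central1-composer-env-a29d63bd-bucket"
)
print(shlex.join(command))
```

Building the command as a list rather than a single string avoids shell-quoting problems if a file name ever contains spaces.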

Step-6: Once the file is copied, the DAG is created and appears in the Airflow DAG list; click on it to open it.

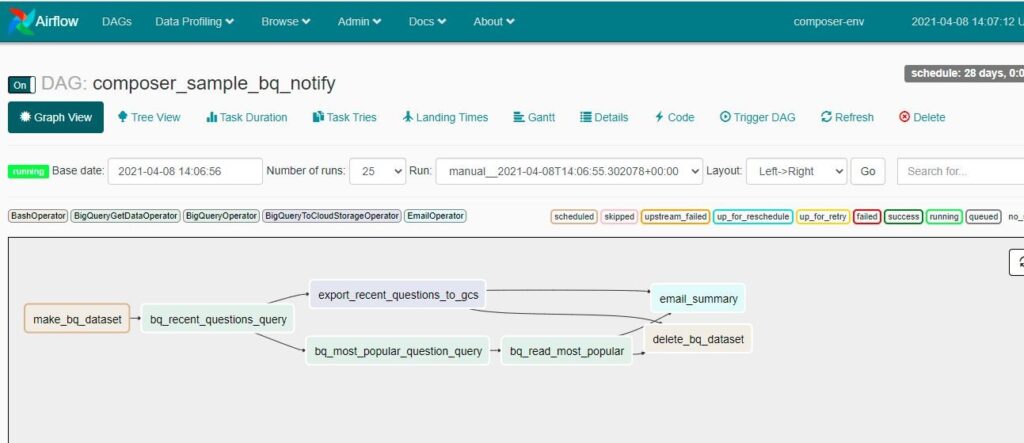

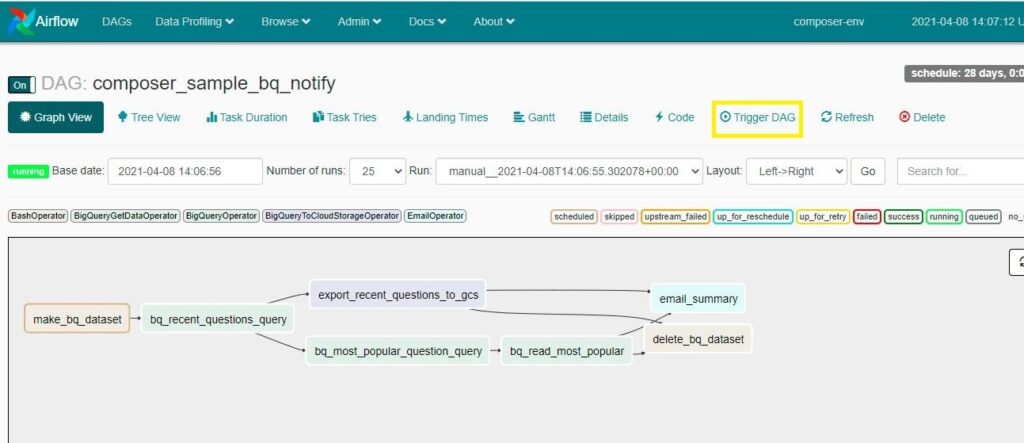

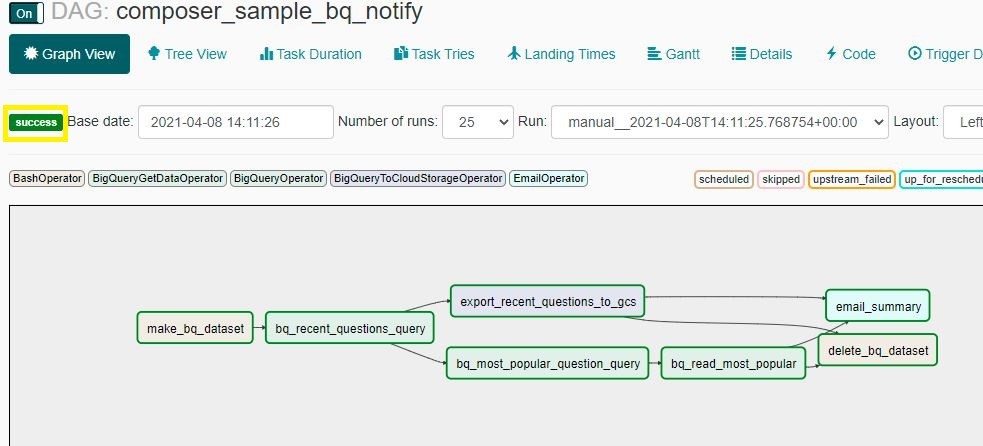

Step-7: You can see the DAG and how it connects the multiple tasks it has to perform.

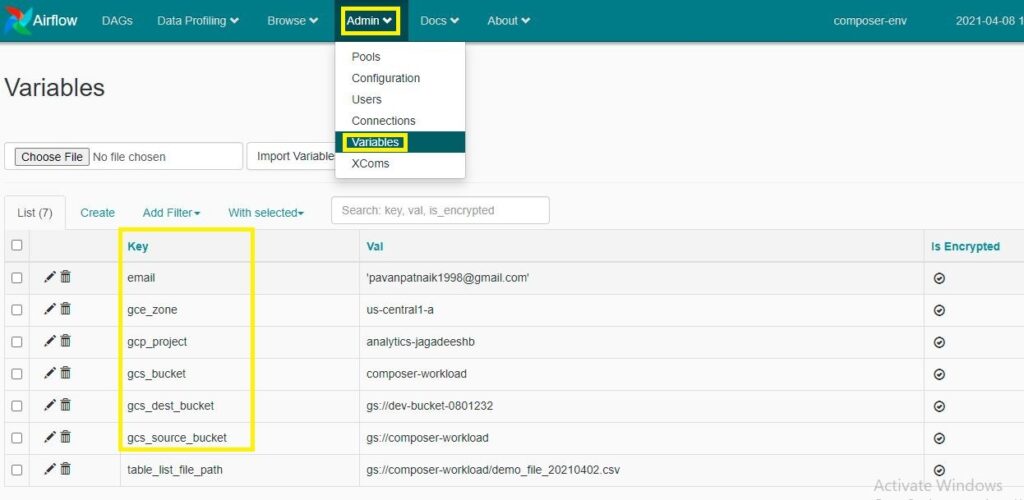

Step-8: Add the variables that are required to run the DAG.

Click on Admin -> Variables and then add the required gcp_project, gcs_bucket, and email variables; email is the address to which updates are sent.
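Instead of typing each variable by hand, the Airflow Variables page can also import them from a JSON file. The sketch below writes such a file. The values are placeholders and the file name `variables.json` is an assumption; replace them with your own project ID, bucket, and email address. The exact format expected for each value depends on the DAG code that reads it.

```python
import json

# The three variables named in Step-8.
REQUIRED_KEYS = ("gcp_project", "gcs_bucket", "email")

# Placeholder values (assumptions): replace with your own settings.
variables = {
    "gcp_project": "your-project-id",
    "gcs_bucket": "your-bucket",
    "email": "you@example.com",
}

# Fail early if a required variable is missing or empty.
missing = [key for key in REQUIRED_KEYS if not variables.get(key)]
if missing:
    raise ValueError(f"Missing Airflow variables: {missing}")

# Write a flat JSON object of variable name -> value.
with open("variables.json", "w", encoding="utf-8") as handle:
    json.dump(variables, handle, indent=2)
```

Inside a DAG file, such values are typically read with `airflow.models.Variable.get`, for example `Variable.get("gcp_project")`.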

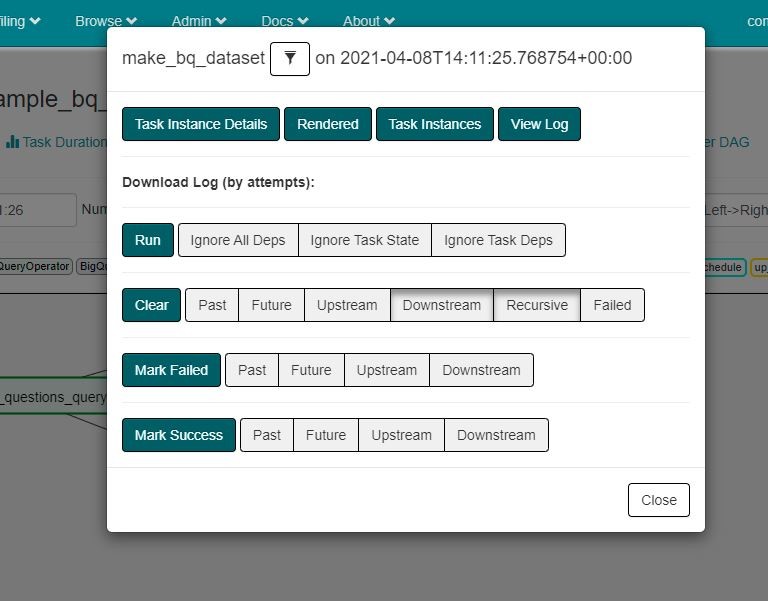

Step-9: Click on a task to perform operations on it; for example, you can re-run the task or clear its execution.

Step-10: Now click on ‘Trigger DAG’ to run the DAG you built. The DAG starts with the first task and runs the remaining tasks in the order of their dependencies. When a task completes successfully, it turns ‘Green’ on the canvas; while it is still running, it is shown in a lighter color (light green in Airflow’s default color scheme).

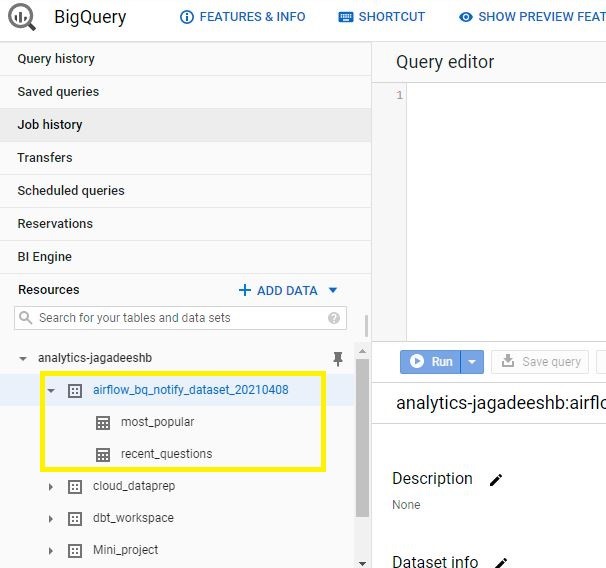

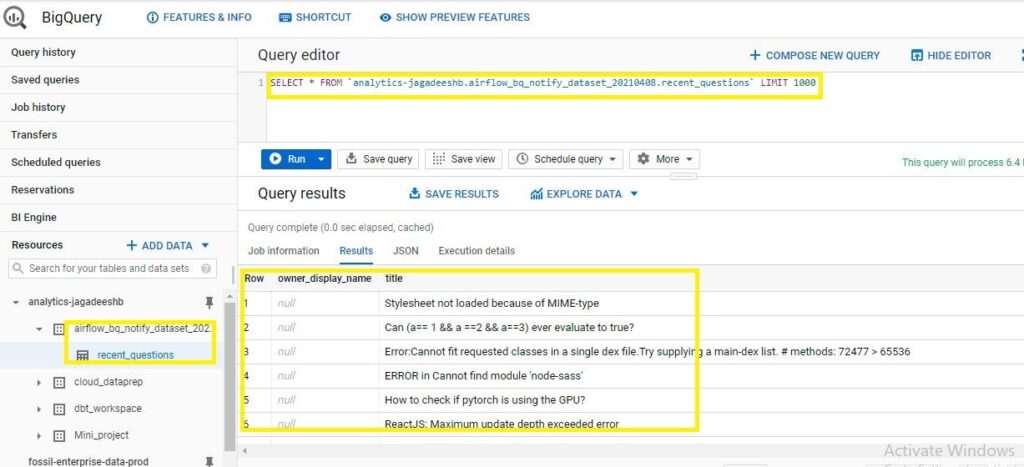

Step-11: Once the DAG starts running, you can see tables being created in BigQuery and data being loaded into them from the GCS bucket. You can run queries on these tables as required. Refer to the screenshots to get an idea of how it works.

Step-12: The DAG shows the status ‘SUCCESS’ once all the tasks in the DAG have executed.

Tool Exploration:

1. Click on each option available in the Airflow interface to explore the DAG. DAG Details shows the complete information about the DAG.

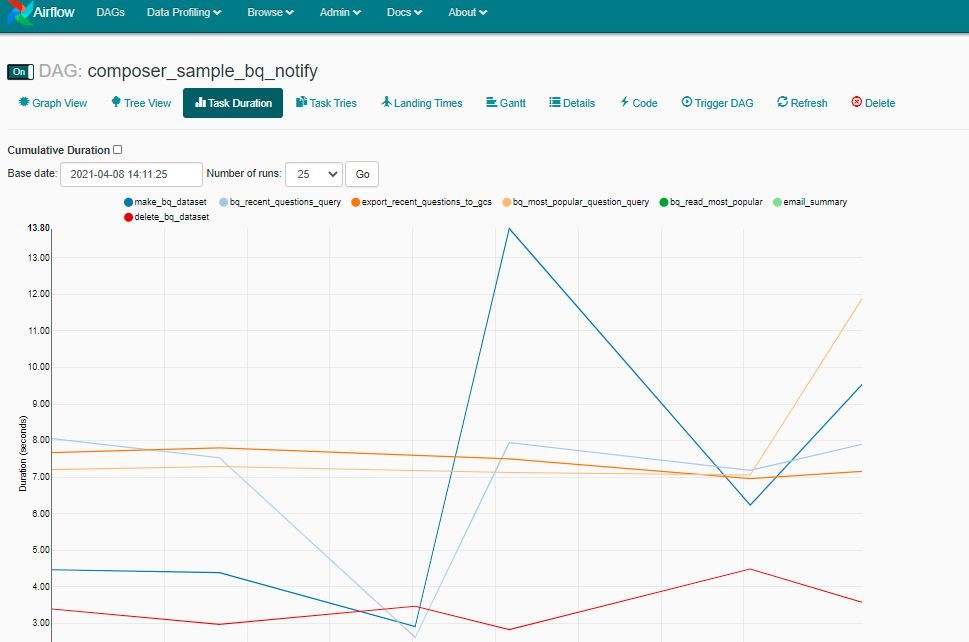

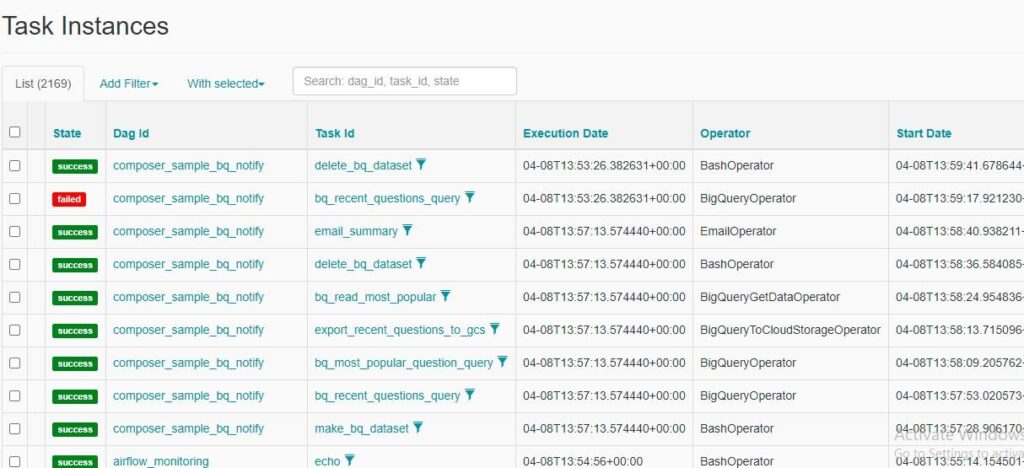

2. You can see how long each task takes under Task Duration, and the status of each task under Task Instances.

References / Sources of the information referred:

Google Cloud documentation

Writing DAGs (workflows) | Cloud Composer | Google Cloud

MOURI Tech service this article relates to: Data and Analytics.

https://www.mouritech.com/services/business-data-engineering-analytics-sciences

Summary:

In this article, we have seen how to create a DAG in a Cloud Composer environment. Cloud Composer provides the infrastructure to create and run Airflow workflows. The building blocks of a workflow are called Airflow operators, and a workflow, known as a DAG, is the set of connections between these operators. A DAG is defined in a Python file and is composed of a DAG definition, operators, and operator relationships. Test your DAG(s) locally before deploying them to the managed Airflow service provided by GCP’s Cloud Composer. Also make sure that the project has been created and that billing is enabled for it.
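As one possible way to test a DAG file locally before uploading it, the sketch below parses the file with Python's standard `ast` module and checks that it imports Airflow and creates a `DAG`. It is a structural check only and does not execute the file. The DAG source shown in the string is hypothetical: its import paths assume Airflow 2, and the task IDs and commands are illustrative. It also marks the three parts of a DAG file: the DAG definition, the operators, and the operator relationships.

```python
import ast

# Hypothetical DAG file content, kept in a string so that the check runs
# without Airflow installed. Import paths assume Airflow 2.
DAG_SOURCE = '''
from datetime import datetime

from airflow import DAG
from airflow.operators.bash import BashOperator

# 1. DAG definition
with DAG(dag_id="composer_sample", start_date=datetime(2021, 1, 1), catchup=False) as dag:
    # 2. Operators
    extract = BashOperator(task_id="extract", bash_command="echo extract")
    load = BashOperator(task_id="load", bash_command="echo load")

    # 3. Operator relationships: extract runs before load
    extract >> load
'''


def check_dag_source(source: str) -> dict:
    """Parse DAG source without executing it and report basic structural facts."""
    tree = ast.parse(source)  # raises SyntaxError if the source is not valid Python
    imports_airflow = False
    defines_dag = False
    for node in ast.walk(tree):
        if isinstance(node, ast.ImportFrom):
            if (node.module or "").split(".")[0] == "airflow":
                imports_airflow = True
        elif isinstance(node, ast.Import):
            if any(alias.name.split(".")[0] == "airflow" for alias in node.names):
                imports_airflow = True
        elif isinstance(node, ast.Call) and getattr(node.func, "id", None) == "DAG":
            # Only detects direct calls written as DAG(...), not models.DAG(...).
            defines_dag = True
    return {"imports_airflow": imports_airflow, "defines_dag": defines_dag}


result = check_dag_source(DAG_SOURCE)
print(result)
```

A check like this catches syntax errors and missing DAG definitions early. If Airflow is installed locally, importing the file as well will also surface import errors that a purely syntactic check cannot see.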

Pavan Kumar RUDHRAMAHANTI

Associate Software Engineer, Analytics (DWH & Data lakes).

MOURI Tech