Purpose of the article: This document helps to extract data from nested Subfolders using ADF

Intended Audience: This article will help our Organizational level kind of developers working on On-premises File systems to Azure SQL using Azure Data Factory

Tools and Technology: Azure SQL, on-premises File system

Keywords: Copy multiple files from Nested Subfolders from the File System to the Azure SQL Server using ADF

Problem Statement:

In certain situations, you may need to copy files from multiple nested subfolders dynamically and load each file data recursively into the database.

Solution:

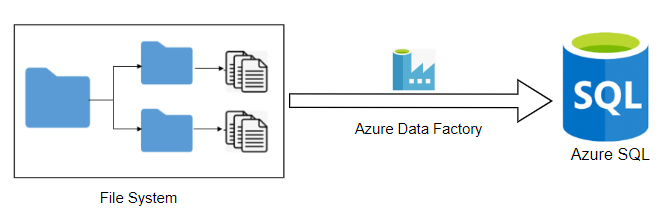

Flow Diagram:

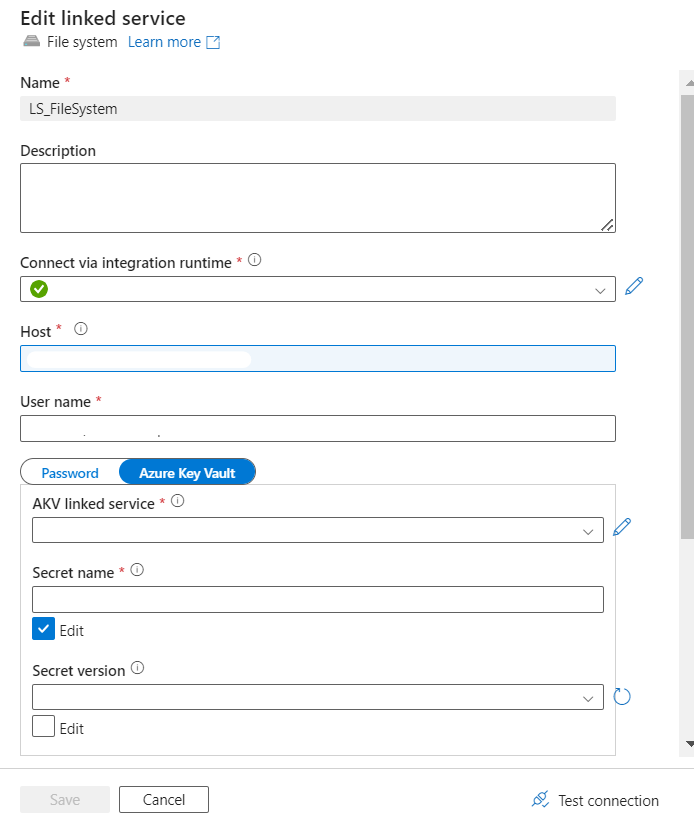

Step 1: Install ‘self-hosted integration run time’ in the On-Premises system to establish the connection between ADF and the File System.

Step 2: Create a pipeline in Azure Data Factory. One pipeline will act as the Parent pipeline and another pipeline will act as the Child pipeline.

Step 3: Create a Dataset and linked service to establish the connection to the File System in the On-Premises System.

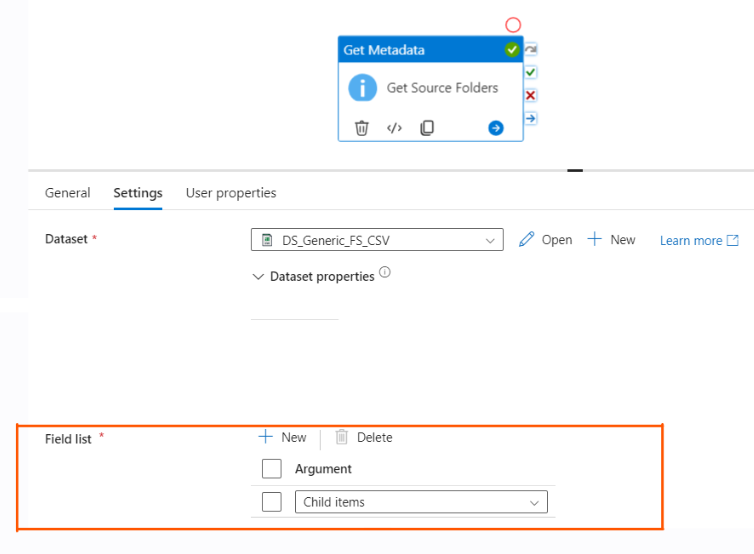

Step 4: Use ‘Get Metadata’ Activity to fetch details of the main Folders that are present in the File System.

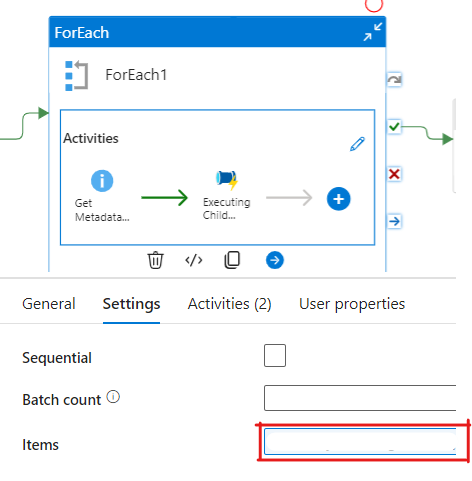

Step 5: Use the ‘ForEach’ Activity to iterate the main Folders that are coming for the Get Metadata Activity Output.

In the ‘For Each’ activity, in the ‘Settings’ option, we will find the ‘Items’ option. Here, we need to pass dynamic content that will come from the ‘Get Metadata activity Output child items’.

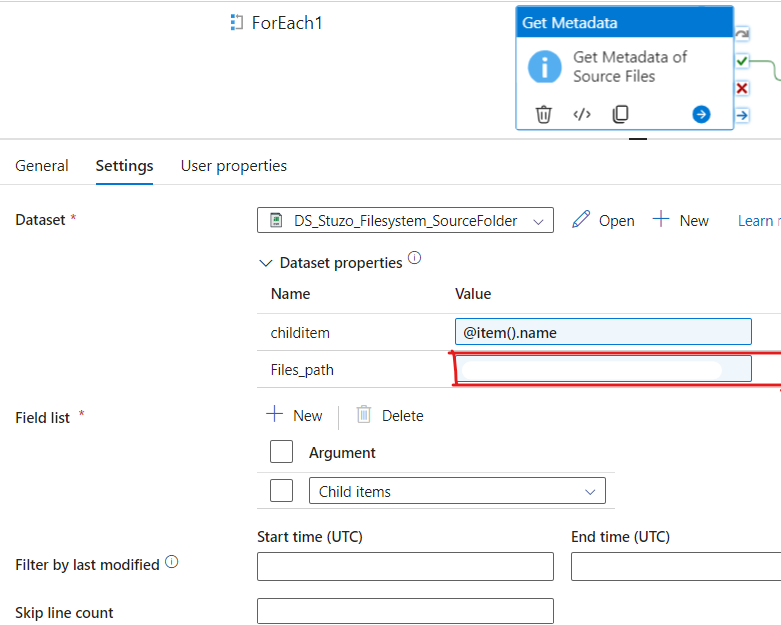

Step 6: Use the ‘Get Metadata’ Activity within ‘ForEach’ to iterate the files within the folder.

In the Files path, you need to add dynamic content as output child items of the ‘Get Metadata’ Activity.

Note:

To perform copying of multiple files, you must apply a ‘ForEach’ loop for each file. Since a nested ‘ForEach’ loop is not allowed, you must create a separate pipeline for each file copying task. Then, you must invoke these pipelines from the main pipeline using the Execute Pipeline Activity.

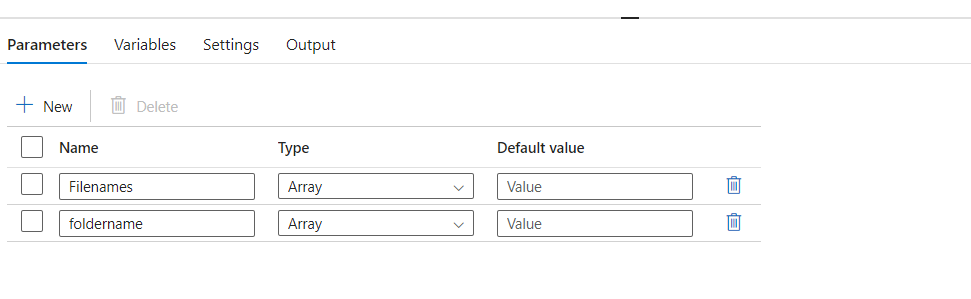

Step 7: Create a Child Pipeline with pipeline parameters.

You need to create the Child Pipeline with parameters because you need to run the Child Pipeline in the main pipeline by passing the folder name and file name as a parameter.

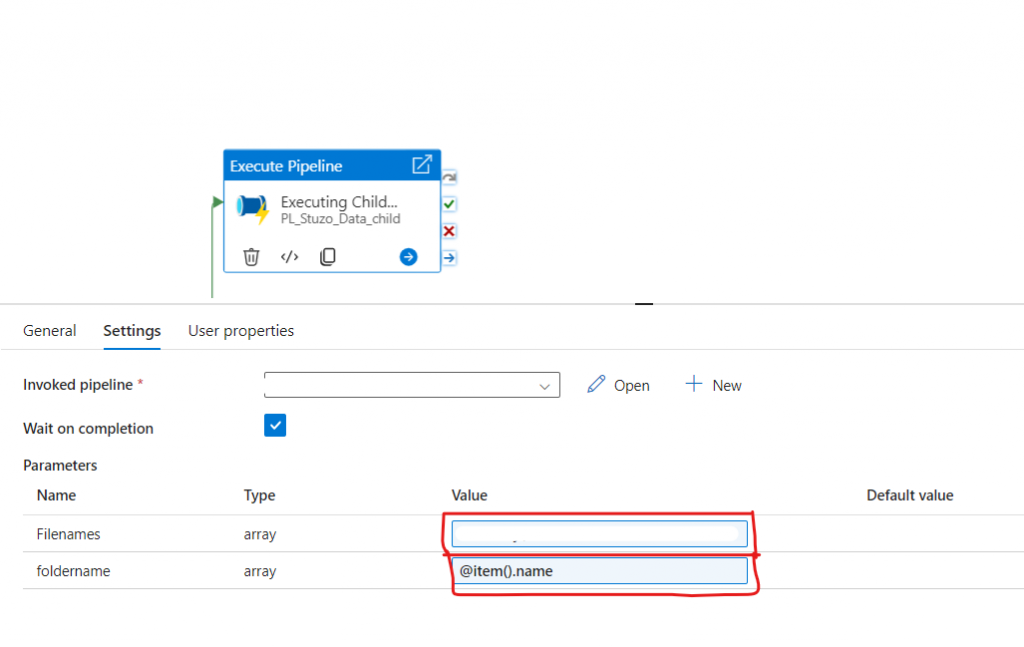

Step 8: Use Execute Pipeline Activity.

In the Filenames, pass the ‘GetMetadata’ output childitems and in the Folder name, pass the item that comes from ‘ForEach’.

Here, from ‘GetMetadata’ activity will fetch the file names, and the files are passed to the Child Pipeline.

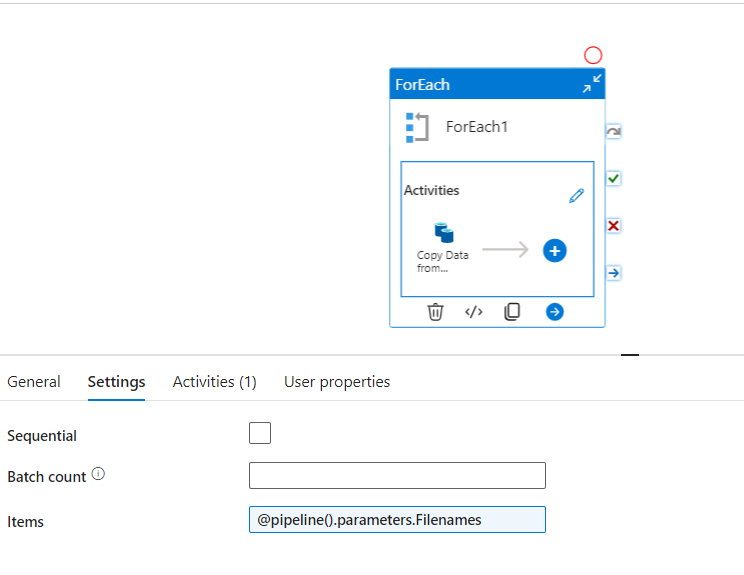

Step 9: Use the ‘ForEach’ Activity in the Child Pipeline.

To iterate the multiple files that are coming from the Parent Pipeline, you need to add the ‘ForEach’ Activity inside the ‘ForEach’ to use the copy activity to copy files from one location to another location.

In the items, you need to pass the pipeline parameters that you have created in the pipeline level.

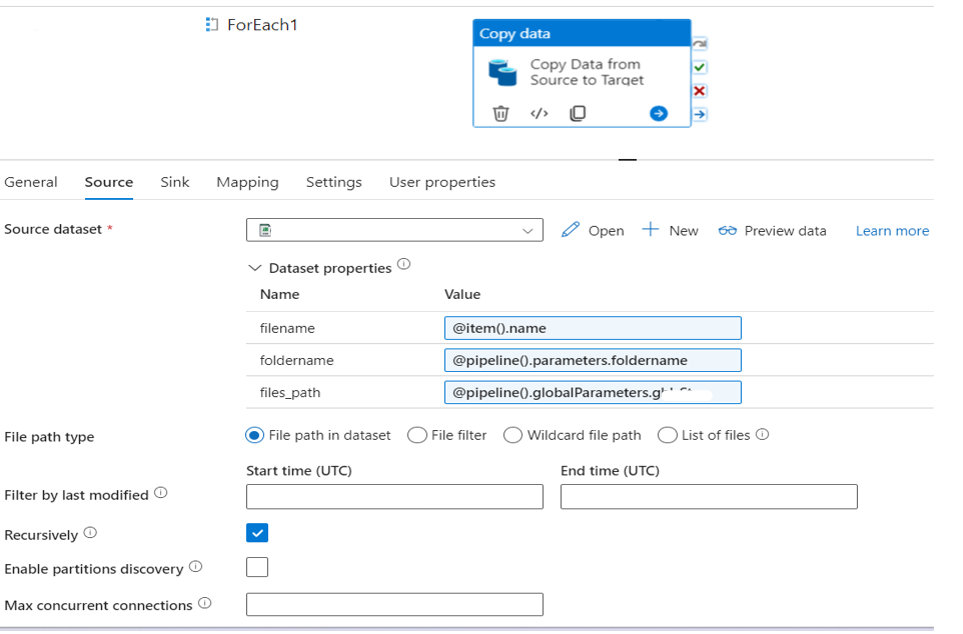

Step 10: Use Copy Activity inside the ‘ForEach’ Activity in the Child Pipeline:

‘ForEach’ will give the file name and need to copy that file from one location to another location, so you need to use the copy data Activity inside the ‘ForEach’.

In the File name, you need to pass the ‘ForEach’ item, and in the Folder name, pass the pipeline level parameter and the folder path needs to specify the folder path.

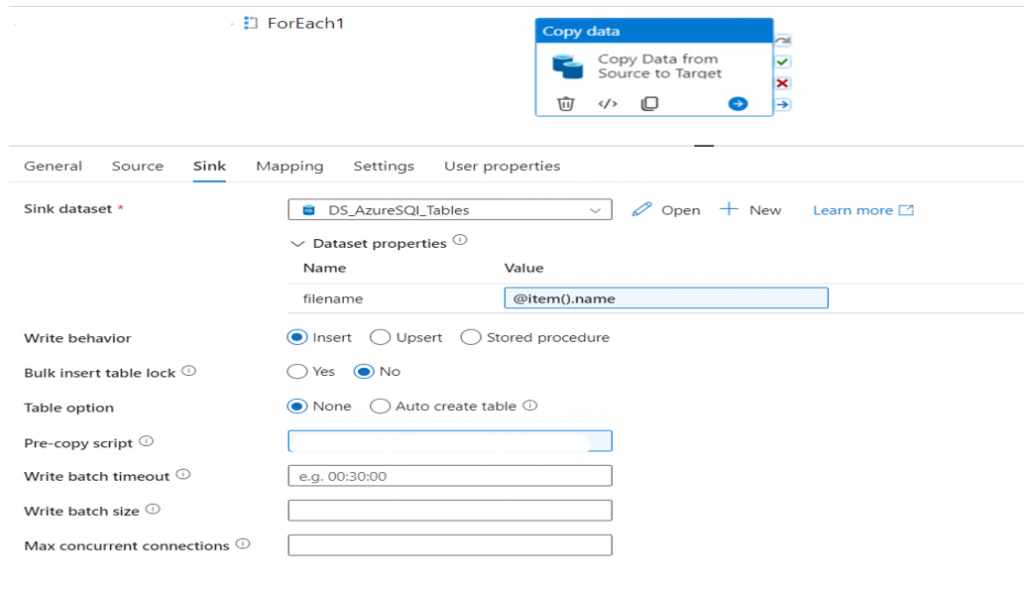

In the sink, pass file names in the ‘ForEach’ item so that the file will be saved as the Source file name.

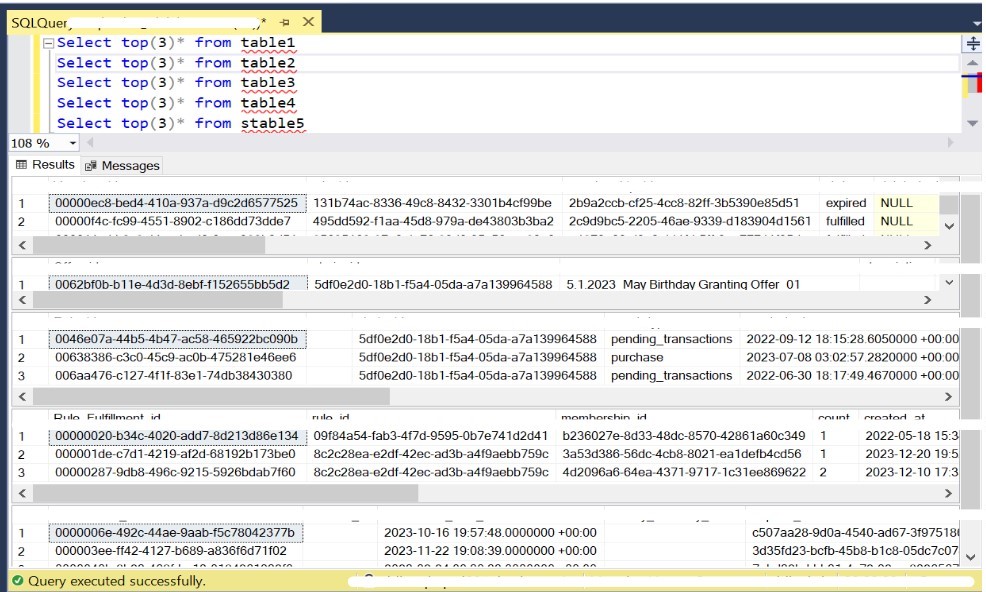

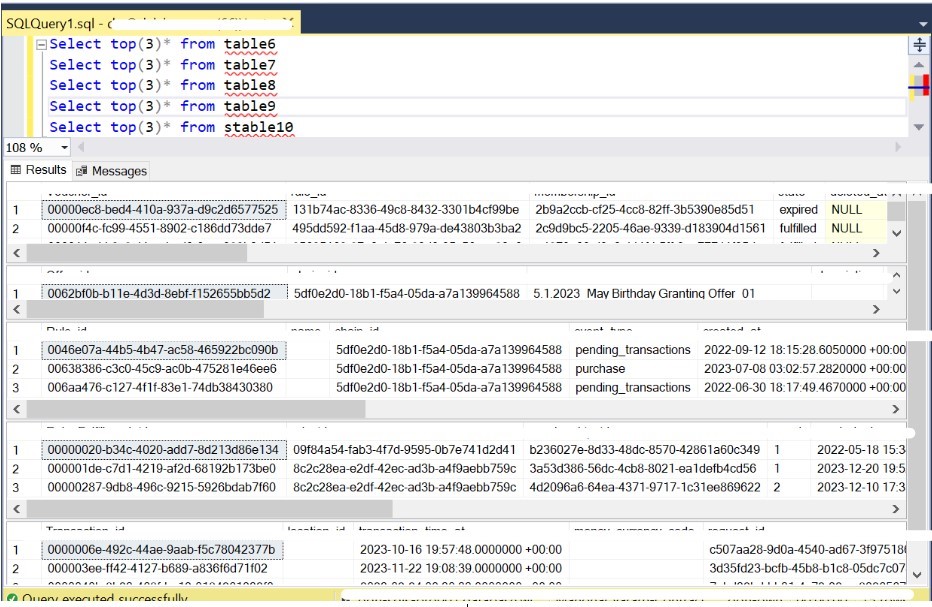

Once the pipeline Development is completed trigger the pipeline and check the data in the database.

Database Output Screenshots:

Conclusion:

In some situations, it is necessary to transfer multiple files from hierarchical folders to Azure SQL. By following the steps mentioned above, it is simple to obtain similar types of Requirements. Improving performance and optimizing costs is achievable through the above process.

Pre-requisites:

- Required Azure subscription.

- Install self-hosted Integration runtime in On-premises Environment.

- Required access to file path in File System.

Author Bio:

Manohar VARAM

Data Engineering Analytics - Technical Consultant

This is Manohar VARAM working as a Technical Consultant at MOURI Tech for the past 2.8 years. I have good experience in Azure Data Factory, Logic Apps, API Management, and SQL.