Purpose of the article: The article guides on migrating data from Amazon S3 to SNOWFLAKE using Storage Integration, ensuring secure and streamlined data transfer.

Intended Audience: The article targets data engineers and IT professionals responsible for data management and looking to efficiently migrate and secure data from Amazon S3 to SNOWFLAKE.

Tools and Technology: SNOWFLAKE, AWS services (IAM, S3)

Keywords: SNOWFLAKE, IAM, S3, Storage Integration, External Stage.

The main purpose of this blog is to connect AWS S3 to SNOWFLAKE without using AWS credentials (secret key and access key). SNOWFLAKE also provides an alternative method to connect to AWS services (especially S3) i.e., by altering the IAM role and policies. This procedure is an alternative approach to the usage of secret keys and is only preferred when the organization has no objection to updating the roles and policies.

Technology Stack:

S3:

S3 is used to store and retrieve any amount of data from anywhere on web at any time. It is easy to access, highly durable, secure & cost-efficient.

IAM Roles:

IAM role is an IAM identity you can create in your account and has specific permissions. It is like an IAM user in that it is an AWS identity with permission policies that determine what the identity can and cannot do in AWS, instead of being uniquely associated with one person.

IAM Policies:

IAM policies are JSON documents that define permissions. IAM policies are attached to identities (users, groups, or roles) within your AWS account, and help you control access to AWS resources by granting or denying permissions.

SNOWFLAKE:

SNOWFLAKE is a cloud-based data warehouse platform, helps organizations store, manage, and analyze large volumes of data using SQL queries. It offers scalability, flexibility, performance, allowing users to efficiently process diverse data types.

Storage Integration:

SNOWFLAKE object stores generated IAM users & set of allowed or blocked storage locations.

External Stage:

Use to store metadata, i.e., external data files such as S3 bucket URL and file format.

Artifacts:

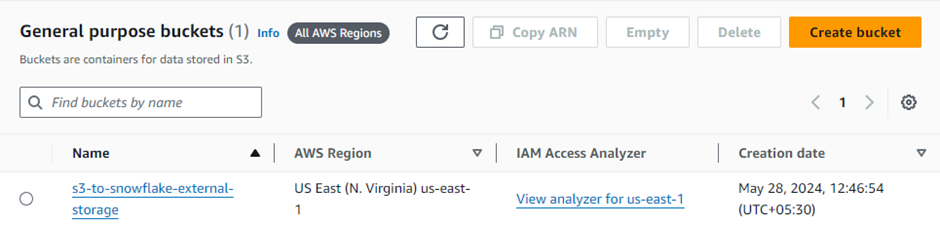

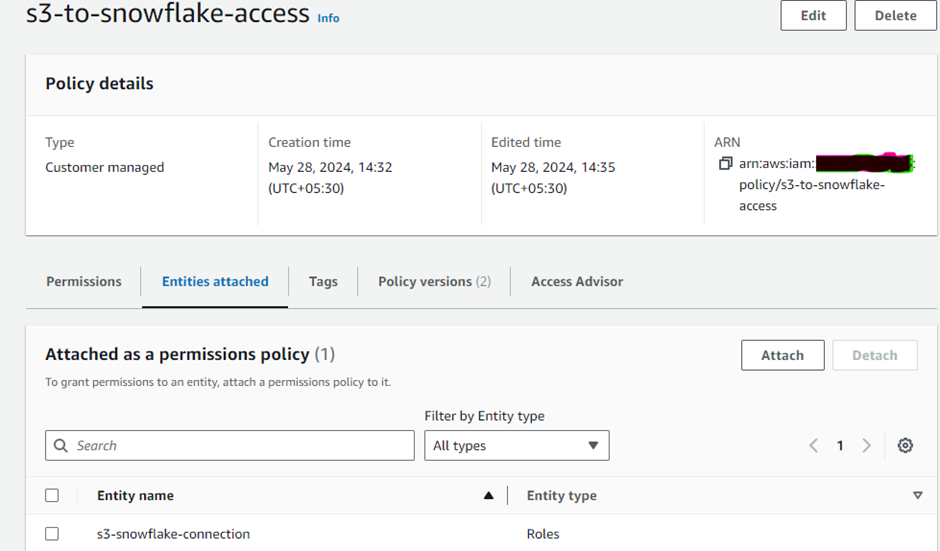

| Service | Name | Cost Incurred |

| AWS S3 Bucket | S3-to-snowflake-external-storage | $0 (No cost for creating or running SF) |

| AWS IAM role | s3-snowflake-connection | $0 (No cost for creating roles and policies) |

| AWS IAM policy | S3-snowflake-access | $0 (No cost for creating roles and policies) |

| SF Storage Integration | aws_s3_integration | |

| SF External Stage | s3_stage |

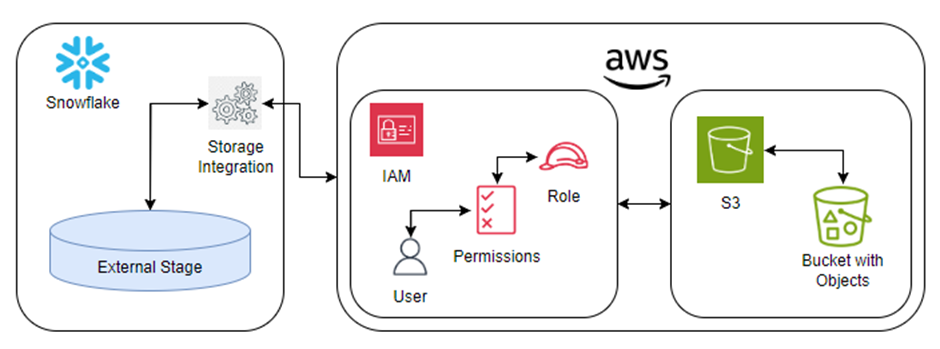

Architecture:

STEPS:

- Create S3 Bucket

- Create IAM policy

To create policies, you must follow the steps mentioned below:

- Search for IAM services → Choose policies → Click create policy

- Select server → Add Actions (check mark for All S3 Actions) → select specific actions

- Click Next

- Enter policy Name & policy description → Click create policy

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditore",

"Effect": "Allow",

"Action": [

"s3:Getobject",

"s3:ListBucket",

"s3:GetBucketLOCATION",

"s3:GetObjectVersion"

],

"Resource": "*"

},

{

"Sid": "VisualEditor1",

"Effect": "Allow",

"Action": "s3:*",

"Resource": "arn:aws: s3:*:******:access-grants/default/grant/*"

}

- Create IAM role

To create role, you must follow the steps mentioned below:

- Search for IAM services → Choose role → Click create role

- Select AWS account → Another AWS Account → specify 12-digit Account-ID

- Click Next

- Search and attach policy created in above step2.

- Click Next

- Enter role name & role description → Click create role

- Cloud Storage Integration:

create storage integration aws_s3_integration

type = external_stage

storage_provider = s3

enabled = true

storage_aws_role_arn ='<iam_role>'

storage_allowed_locations = ('<bucket>/<path>');

create or replace storage integration aws_s3_integration

type = external_stage

storage_provider = $3

enabled = true

storage_aws_role_arn = 'arn:aws:iam:*****role/s3-snowflake-connection'

storage_allowed_locations = ('s3://s3-to-snowflake-external-storage');

NOTE: Cloud Storage Integration holds external storage metadata such as storage provider, role (generated from IAM service), object storage (AWS S3) location. With external storage, there is no need to provide credentials when setting up stages or loading data.

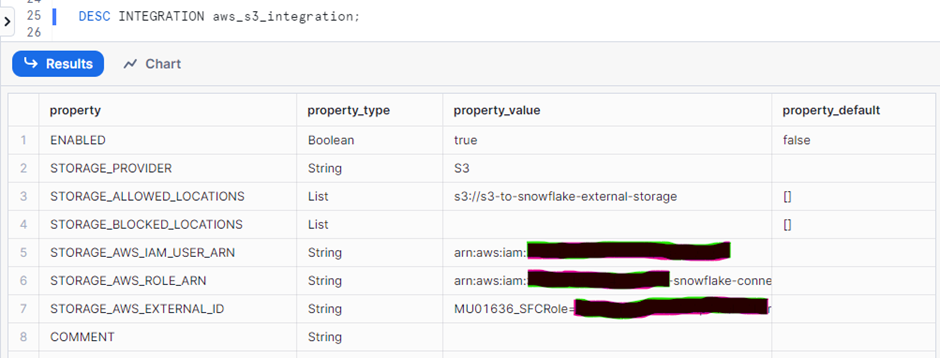

- IAM User SNOWFLAKE Account:

DESC INTEGRATION <Integration_name>;

Here’s the command to retrieve STORAGE_AWS_IAM_USER_ARN and STORAGE_AWS_EXTERNAL_ID. These IDs will be used to edit the IAM role Trust Relationship created in step 3.

- Update IAM Role Policy for SNOWFLAKE:

In this step, you must update the trust relationship of the IAM role created earlier and use the values captured in previous step. Update the values marked in yellow to make sure the IAM role now points towards SNOWFLAKE with proper permissions to S3.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": ""

"Effect": "Allow",

"Principal": {

"AWS": "<snowflake_user_arn>

},

"Action": "sts: AssumeRole",

"Condition": {

"StringEquals": {

"sts: ExternalId": "<snowflake_external_id>"

}

}

}

]

}

- Create an External Stage:

Create an external (i.e. S3) stage, references to Storage Integration created in above step 4. Create Cloud Storage Integration in SNOWFLAKE.

GRANT CREATE STAGE ON SCHEMA <schema_name> TO ROLE <role_name>;

GRANT USAGE ON INTEGRATION <integration_name> TO ROLE <role_name>;

USE SCHEMA <data_base_name>.<schema_name>;

create or replace stage <stage_name>

URL = ‘<S3_path>’

storage_integration = ‘<integration_name>’;

--Permission to create stage

GRANT CREATE STAGE ON SCHEMA TEST_AWS TO ROLE ACCOUNTADMIN

GRANT USAGE ON INTEGRATION aws_s3_integration TO ROLE ACCOUNTADMIN

-- creating stage

USE SCHEMA TEST_DB.TEST_AWS;

create or replace stage s3_stage

URL= 's3://s3-to-snowflake-external-storage'

storage_integration=aws_s3_integration;

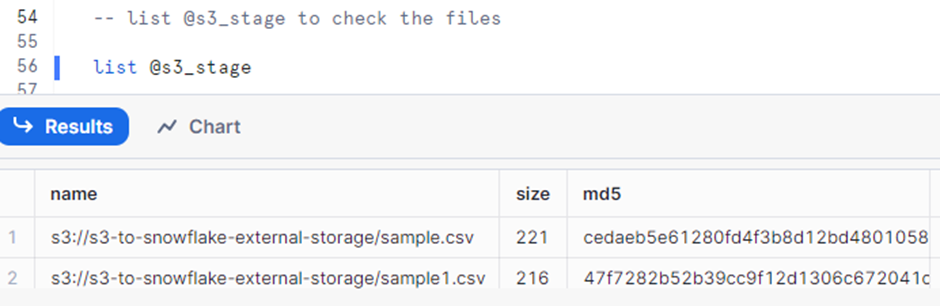

- List SNOWFLAKE Stage:

List stage to see the files are present, thus completing the integration between SNOWFLAKE & AWS.

list @<stage_name>;

- Table creation in SNOWFLAKE:

Table creation statement lays out the structure for storing data within SNOWFLAKE data warehouse.

Table creation

USE TEST_DB;

create table TEST_AWS. EMPLOYEE(

emp_nbr int,

emp_sal int,

ins_date varchar (50),

src_sys_key varchar (50)

);

- Command to Load Data into SNOWFLAKE:

This SQL command is used to copy data from files stored in an Amazon S3 bucket into table. The data is read from location defined by the variable to the Stage created by providing with required file type and delimiter.

COPY INTO <table_name>

FROM <stage_name>

FILE_FORMAT = (TYPE = 'file_type' FIELD_DELIMITER = 'delimiter_type' SKIP_HEADER = 1);

-- Copy command

COPY INTO EMPLOYEE

FROM @s3_stage

FILE_FORMAT = (TYPE = 'CSV'FIELD_DELIMITER =',' SKIP_HEADER = 1);

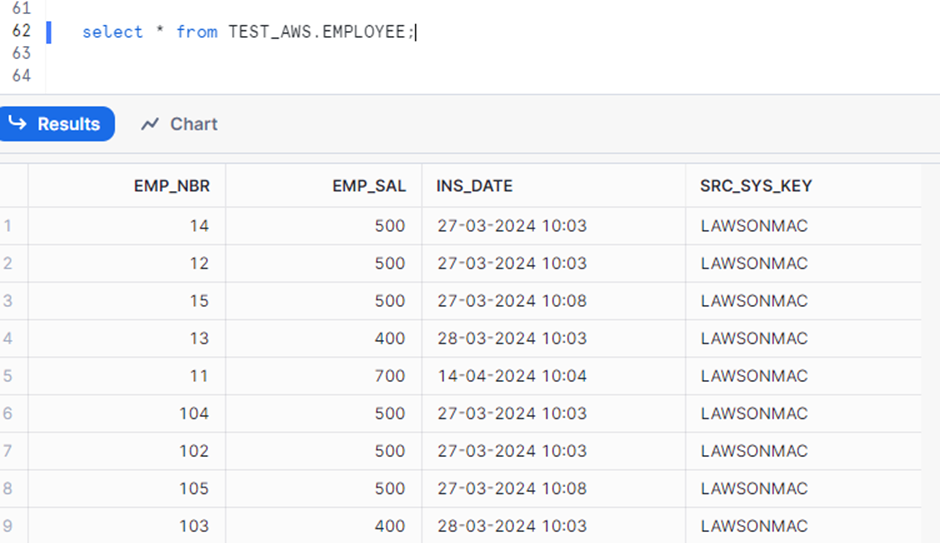

- Output:

Advantages:

- If IAM access is not provided to the end-user or customer, then this is a secured connection with no requirement for AWS credentials.

- You can control the permissions of how much access to be provided on S3 for SNOWFLAKE using IAM roles and policies.

References:

Author Bio:

Sainath Reddy AVULA

Associate Software Engineer - Data Engineering-Analytics

I’m Sainath Reddy AVULA, and I've been with MOURI Tech for one year as Associate Software Engineer in Data Engineering. Possess good knowledge in AWS Services and expertise in SNOWFLAKE and SQL.