Purpose of the article: To explain how Large Language Models (LLMs) can be utilized to convert natural language queries (NLP) into SQL queries effectively. It aims to demonstrate the capabilities of LLMs in enhancing the process of querying databases.

Intended Audience: Data Analyst, Data Scientists, Database Administrators, Data Engineers, Software Engineer and Developers.

Tools and Technology: Python, Flask, SQLite, LLM, Langchain.

Keywords: NLP2SQL, Natural Language Understanding, Data Retrieval, Text-to-SQL, SQL Query Generation.

What is LLM?

LLM stands for Large Language Model. It refers to sophisticated Artificial Intelligence models trained on vast amounts of data. They are pivotal in applications ranging from Natural Language understanding and generation to improving the efficiency of various tasks like translation, summarization, and dialogue systems.

Example of LLMs: OpenAI’s GPT series, Google’s BERT or Mistral etc.,

Why LLM?

LLMs are pivotal due to their ability to handle complex Natural Language tasks with high accuracy. LLMs enhance user interactions with applications, making them crucial for advancing AI-driven capabilities across diverse domains.

NLP to SQL refers to the process of converting Natural Language queries into Structured Query Language (SQL) commands that can be executed on databases. This involves leveraging Natural Language Processing (NLP) techniques and often advanced models like Large Language Models (LLMs). NLP to SQL systems interpret the intent behind user queries, analyze the semantics of sentences, and generate corresponding SQL queries to retrieve relevant data from databases.

These systems aim to bridge the gap between human language understanding and database querying, enabling more intuitive and efficient interactions with data-driven applications and services.

Step-by-Step process of converting Natural Language to SQL queries:

- Install the packages.

pip install flask

pip install pandas

pip install python-dotenv

pip install sqlite3

pip install langchain-openai

pip install langchain

pip install langchain-community

- Import the packages.

from flask import Flask, request

import sqlite3

from langchain_openai_llm import get_llm

from langchain_community.utilities import SQLDatabase

import pandas as pd

from langchain_openai import AzureChatOpenAI

from dotenv import load_dotenv

import os

load_dotenv()

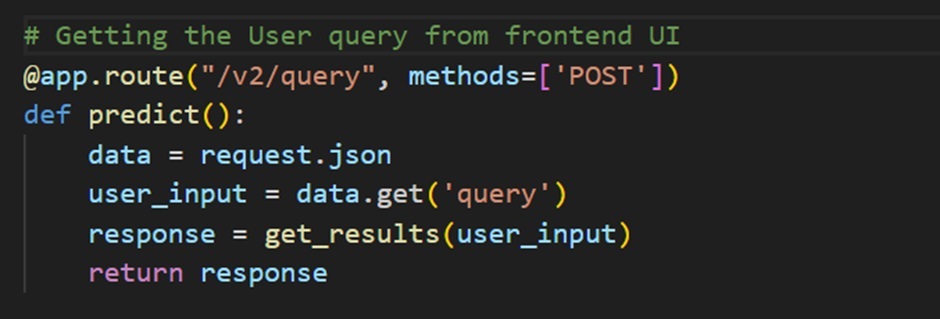

- Passing User queries in Natural Language can be done via frontend UI or, in our case, through the Postman API platform.

#Getting the User query from frontend UI

@app.route("/v2/query", methods=['POST'])

def predict():

data = request.json

user_input = data.get('query')

response = get_results(user_input)

return response

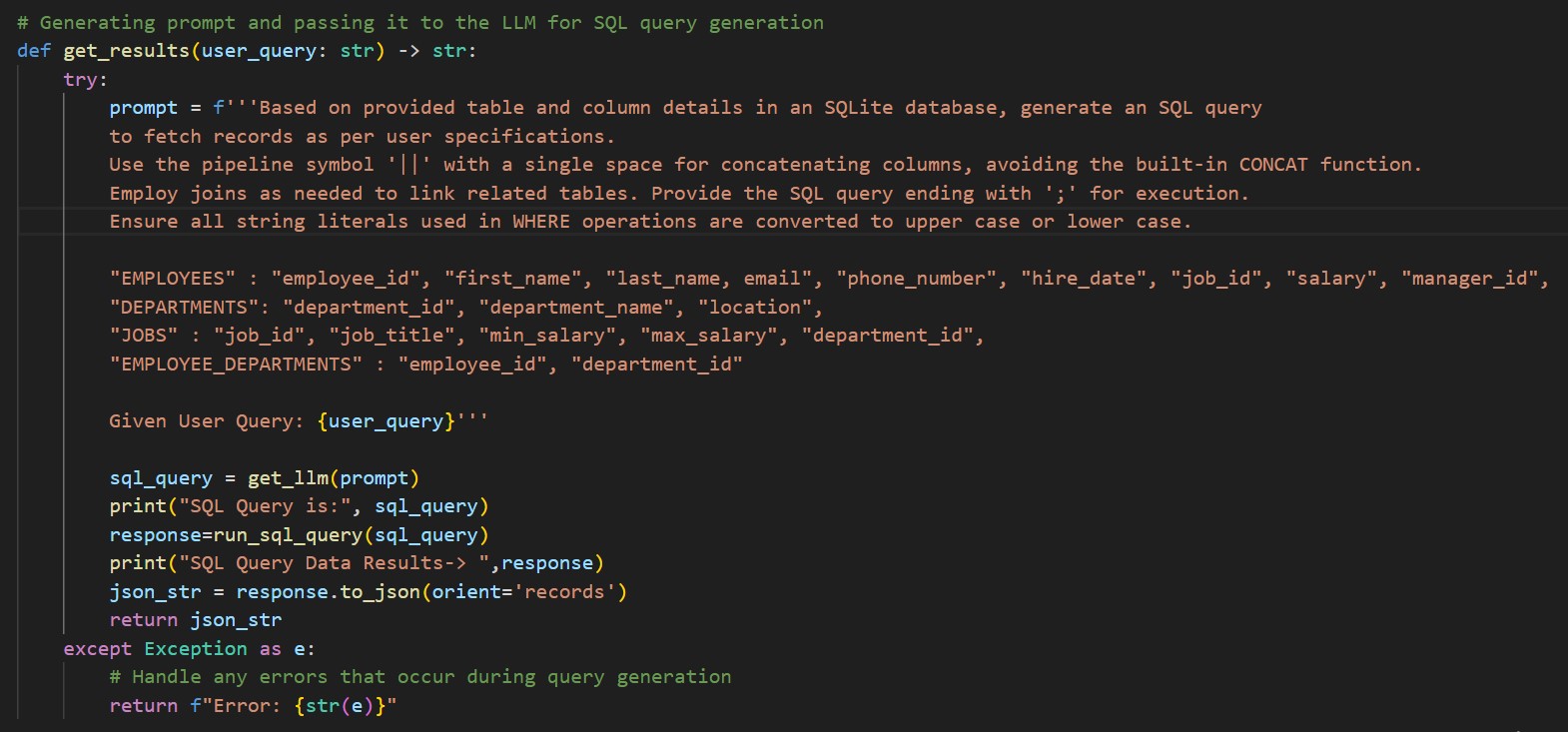

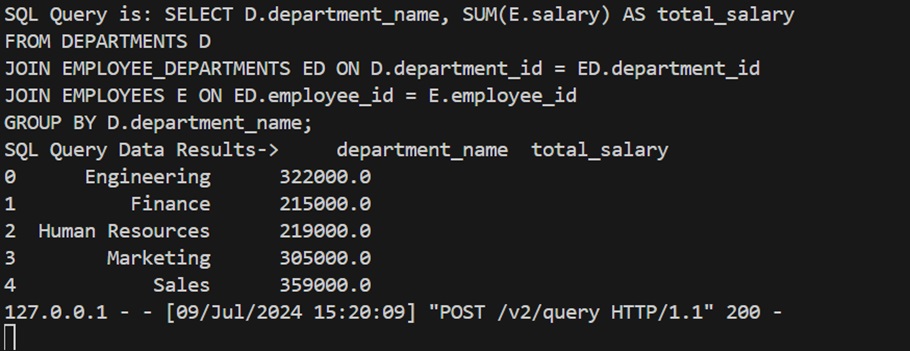

- Generating prompts and passing them to the LLM model for SQL query generation based on user specifications and provided table data. Once the SQL query is generated, it is executed at the database level to fetch relevant records.

#Generating prompt and passing it to the LLM for SQL query generation

def get_results(user_query: str) -> str:

try:

prompt = f'''Based on provided table and column details in an SQLite database, generate an SQL query to fetch records as per user specifications.

Use the pipeline symbol '||' with a single space for concatenating columns, avoiding the built-in CONCAT function. Employ joins as needed to link related tables. Provide the SQL query ending with ';' for execution. Ensure all string literals used in WHERE operations are converted to upper case or lower case.

"EMPLOYEES": "employee_id", "first_name", "last_name, email", "phone_number", "hire_date", "job_id", "salary", "manager_id",

"DEPARTMENTS": "department_id", "department_name", "location",

"JOBS" : "job_id", "job_title", "min_salary", "max_salary", "department_id",

"EMPLOYEE_DEPARTMENTS": "employee_id", "department_id"

Given User Query: {user_query}'''

sql_query = get_11m(prompt)

print("SQL Query is:", sql_query)

response=run_sql_query(sql_query)

print("SQL Query Data Results-> ", response)

json_str = response.to_json(orient='records')

return json_str

except Exception as e:

# Handle any errors that occur during query generation

return f"Error: {str(e)}"

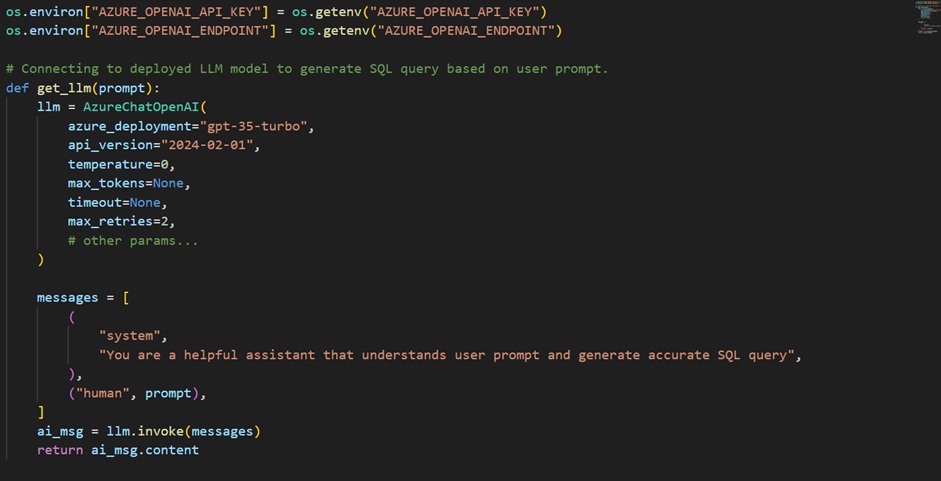

- Connecting and passing prompt to deployed LLM model which will in return generate SQL query and pass it back.

os.environ["AZURE_OPENAI_API_KEY"] = os.getenv("AZURE_OPENAI_API_KEY")

os.environ["AZURE_OPENAI_ENDPOINT"] = os.getenv("AZURE_OPENAI_ENDPOINT")

# Connecting to deployed LLM model to generate SQL query based on user prompt.

def get_11m (prompt):

11m = AzureChatOpenAI(

azure_deployment="gpt-35-turbo",

api_version="2024-02-01",

temperature=0,

max_tokens=None,

timeout=None,

max_retries=2,

# other params...

)

messages = [

(

"system",

"You are a helpful assistant that understands user prompt and generate accurate SQL query"

),

("human", prompt),

]

ai_msg = 11m.invoke(messages)

return ai_msg.content

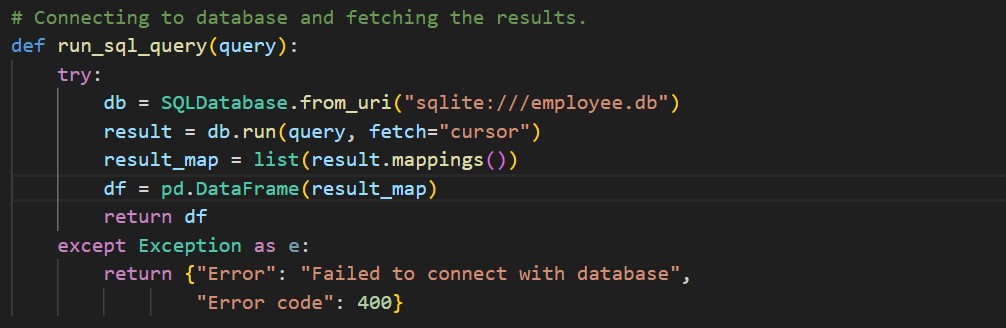

- Executing the generated SQL query on the database to retrieve relevant records.

Connecting to database and fetching the results.

def run_sql_query(query):

try:

db = SQLDatabase.from_uri("sqlite:///employee.db")

result = db.run(query, fetch="cursor")

result_map = list(result.mappings())

df = pd.DataFrame (result_map)

return df

except Exception as e:

return { "Error": "Failed to connect with database", "Error code": 400}

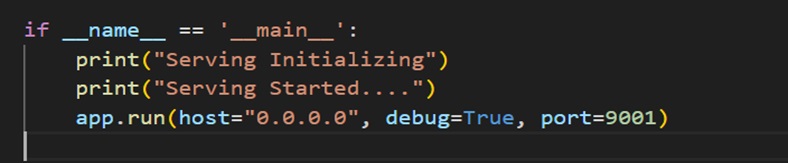

- Initializing the server on port 9001.

if _name_ == '___main__':

print("Serving Initializing")

print("Serving Started....")

app.run(host="0.0.0.0", debug=True, port=9001)

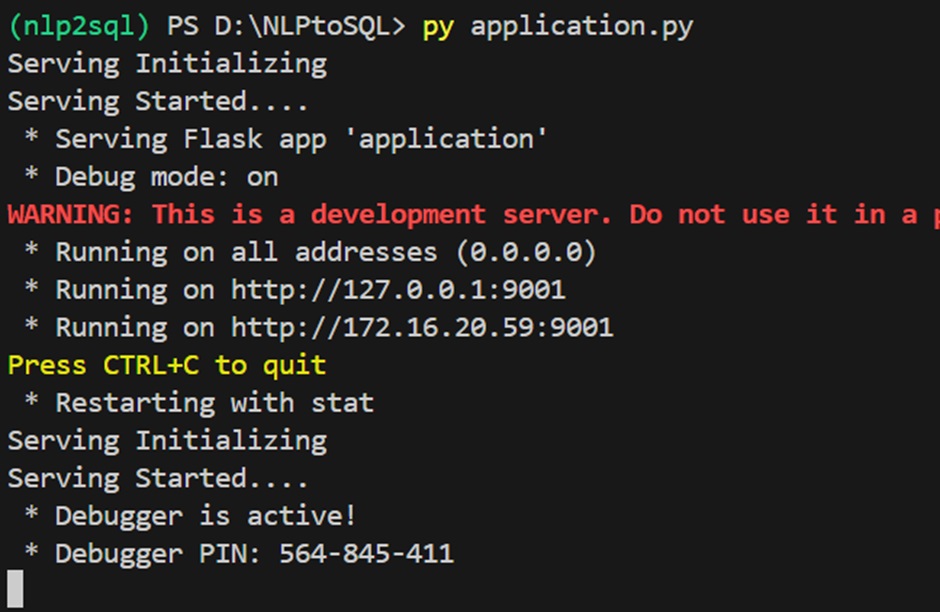

Output:

Server Started on port 9001

http://127.0.0.1:9001

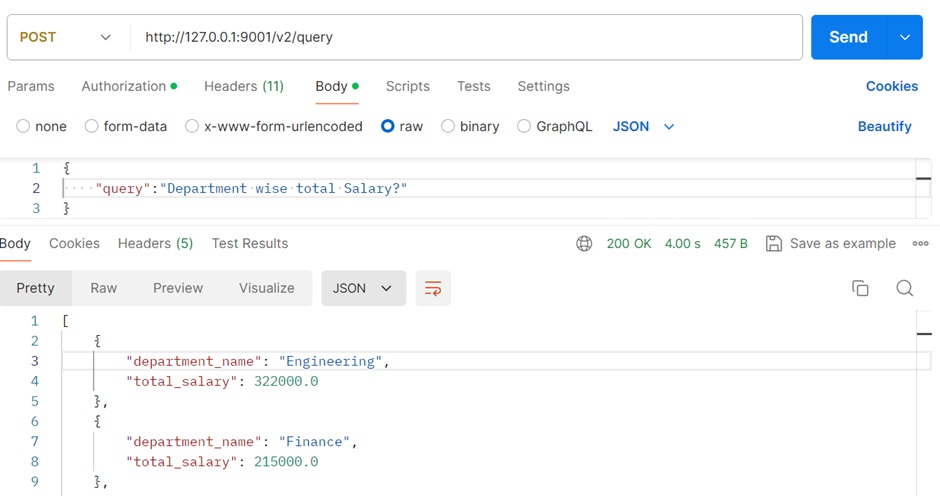

Passing user query using Postman API.

Query: Department wise total Salary?

References:

https://learn.microsoft.com/en-us/azure/ai-services/openai/how-to/chatgpt

https://python.langchain.com/v0.2/docs/integrations/llms/azure_openai/

https://python.langchain.com/v0.2/docs/integrations/chat/azure_chat_openai/

https://python.langchain.com/v0.2/docs/integrations/tools/sql_database/

https://learn.microsoft.com/en-us/azure/ai-services/openai/how-to/switching-endpoints

Author Bio:

Vinod Kumar Reddy KOMMA

Analyst - Advances Analytics

I'm Vinod Kumar Reddy KOMMA, and I've been an Analyst in Advanced Analytics at MOURI Tech for three years. Possess solid experience with Python, SQL programming languages, Azure Cloud and Basics of AI & ML