- Purpose of the article: In this blog, we have explained how we can Load JSON data from Azure BLOB to snowflake using Matillion ETL.

- Intended Audience: This POC/blog will help the organization employees working on Matillion ETL.

- Tools and Technology: Matillion, Azure BLOB, Snowflake

- Key words: implement JSON data loading to snowflake using matillion.

Description:

The JSON file in Azure Blob storage should be loaded into Snowflake Datawarehouse using Matillion ETL.

Data Flow:-

SOLUTION:

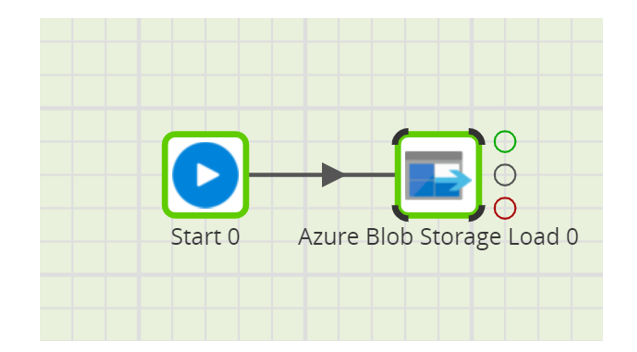

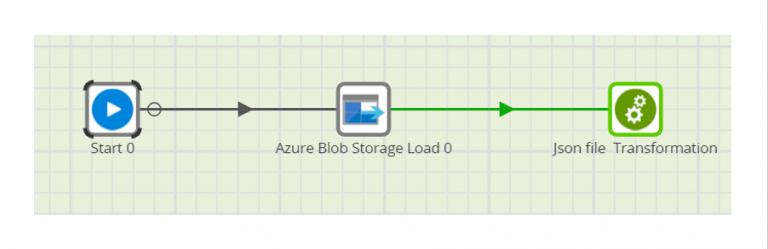

Orchestration Job:

Take Orchestration Job in Matillion, Add Azure Blob Storage Component to Load the data from Blob to Snowflake.

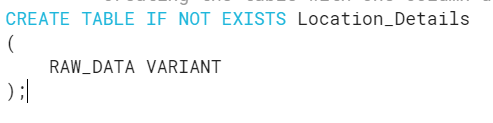

Step 1:

When trying to load a JSON file from Azure blob to Snowflake, create one table with one column by using a variant datatype to that column.

This helps load the raw JSON data into that table.

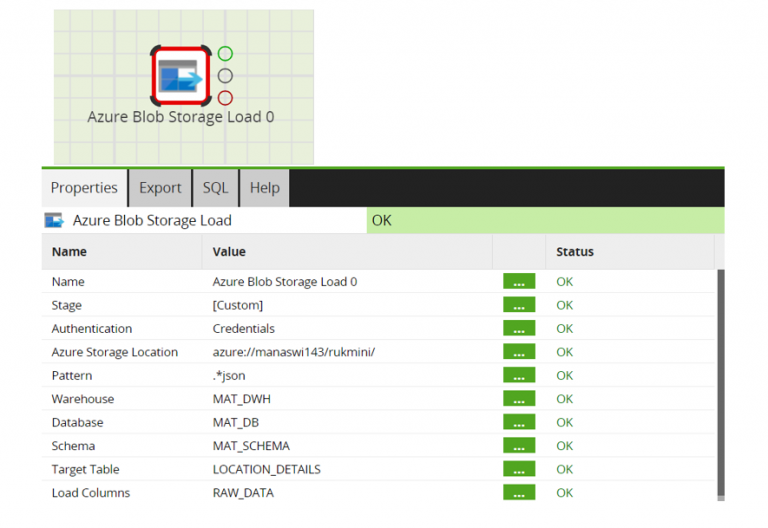

Step 2:

Give a valid storage location to access the file in Azure blob and provide Target table name, which was created in Snowflake to load the raw JSON data.

Mention the pattern as .*JSON to read JSON files and to avoid reading different file formats from the Azure blob location.

Step 3:

Validate and run the entire job.

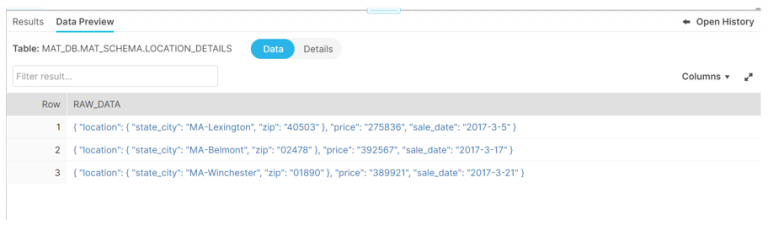

Check the table in Snowflake to see whether the JSON data is loaded.

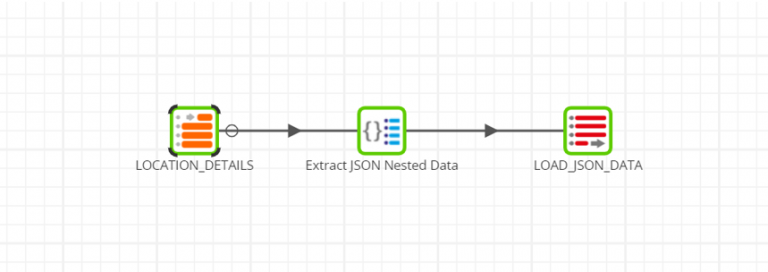

Transformation Job:

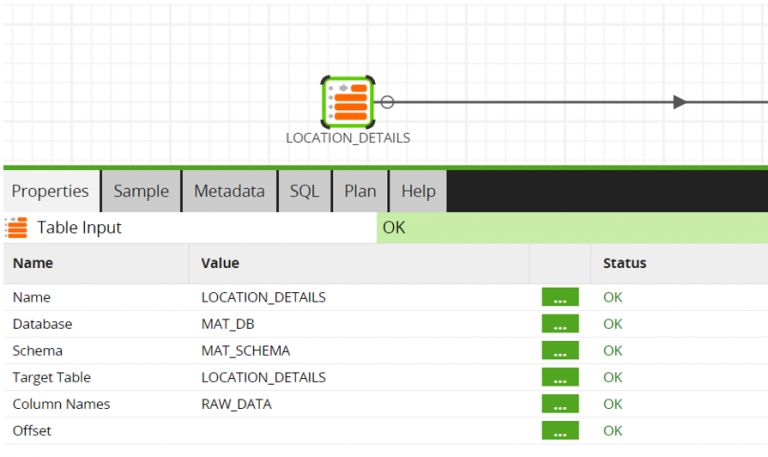

Step 1:

After loading raw JSON data into one table, Load the whole JSON data in the format of rows and columns.

Add the transformation job in Matillion ETL; take the Table Input component and mention the Target table in which raw JSON data was loaded into the table.

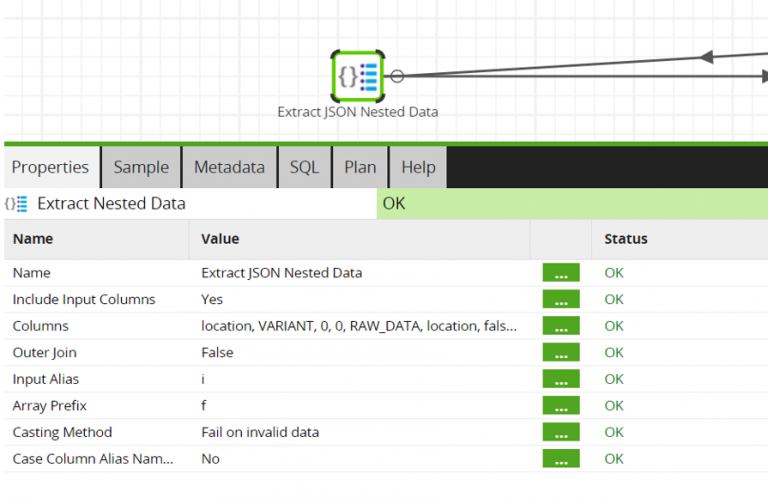

Step 2:

Take the Nested data component to flatten nested data into rows

This is done by taking nested data as key: value pairs (such as a JSON dictionary) and using those keys as column names.

Provide required columns to extract JSON data

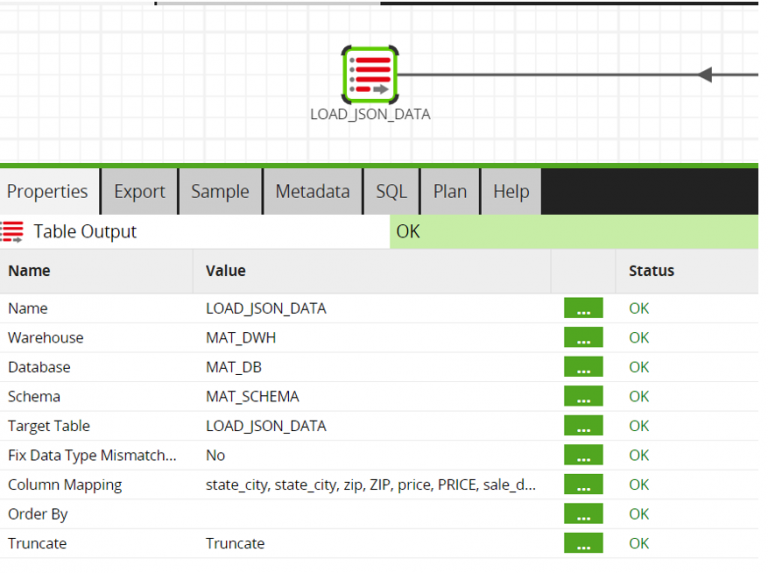

Step 3:

Take the Table output component to load extracted JSON data into rows and columns.

Create the table in Snowflake with the required columns to load JSON data and map the columns to load match column names with the target table.

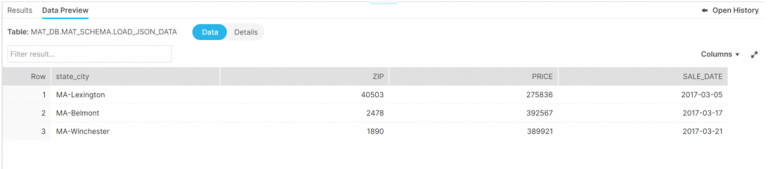

Output:

Entire Flow:

Author Bio:

Rukmini Vuppala

Associate Software Engineer

Hello, I am Rukmini Vuppala. I am as Associate Software Engineering in MOURI Tech for the past 1.5 years. I have good experience in Azure, Matillion, Snowflake, SQL