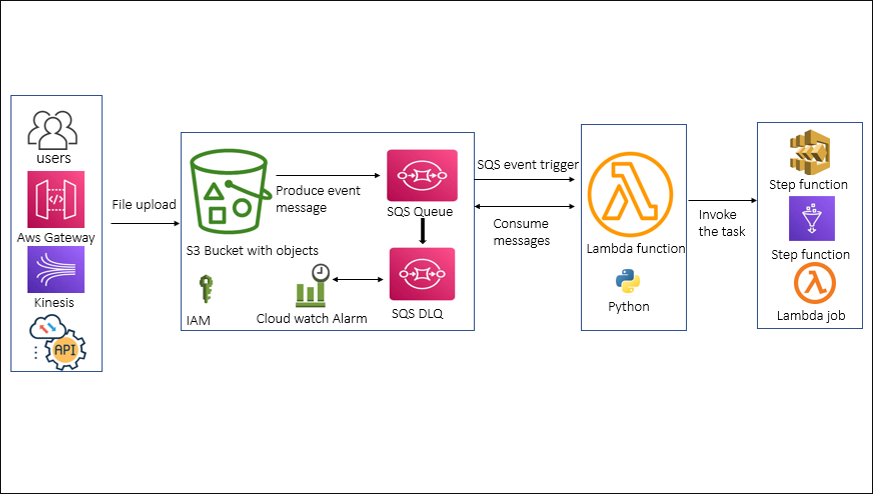

- Purpose of the Article: In this blog, we explain how to use Amazon S3 event notifications, Amazon SQS, and AWS Lambda to trigger and orchestrate ETL jobs (AWS Glue) on the cloud.

- Intended Audience: This blog will help you build event-based services that start or trigger other services or jobs. It describes how to connect Amazon Simple Queue Service (SQS) to Amazon S3 and AWS Lambda.

- Tools and Technology: AWS services (S3, Lambda, Glue, SQS, IAM, CloudWatch), Python

- Keywords: Lambda Function, SQS, S3

The primary purpose of this blog is to recreate a small portion of an event-based architecture that many enterprises use. It explains how to configure an AWS Lambda function with an Amazon SQS trigger. This setup is useful whenever Lambda needs to process messages from SQS.

STEP – 1:

Simple Queue Service:

Amazon SQS is a fully managed message queuing service. It stores messages until a consumer, such as a Lambda function, retrieves and processes them.
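Later in this walkthrough, Lambda handles polling for us. It still helps to see the lifecycle any SQS consumer follows: receive a batch, process each message, then delete it so that it is not delivered again. The following is a minimal sketch. It assumes a boto3 SQS client is passed in (for example `boto3.client("sqs")`). `drain_queue_once` and the `handle` callback are hypothetical names.

```python
from typing import Any, Callable


def drain_queue_once(sqs_client: Any, queue_url: str,
                     handle: Callable[[str], None]) -> int:
    """Receive one batch from an SQS queue, process it, and delete each message.

    `sqs_client` is expected to behave like boto3.client("sqs").
    Returns the number of messages processed and deleted.
    """
    response = sqs_client.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,  # SQS returns at most 10 messages per call
        WaitTimeSeconds=20,      # long polling reduces empty responses
    )
    processed = 0
    for message in response.get("Messages", []):
        # If handle() raises, the message is never deleted, so SQS makes it
        # visible again after its visibility timeout and redelivers it.
        handle(message["Body"])
        sqs_client.delete_message(
            QueueUrl=queue_url,
            ReceiptHandle=message["ReceiptHandle"],
        )
        processed += 1
    return processed
```

Deleting only after `handle` succeeds gives at-least-once processing. A failed message is retried rather than lost.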

Set up an SQS queue for S3 bucket events:

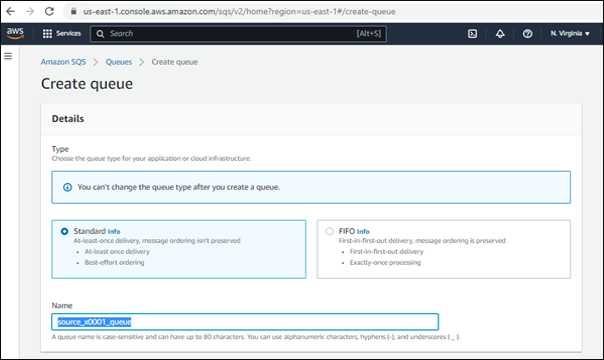

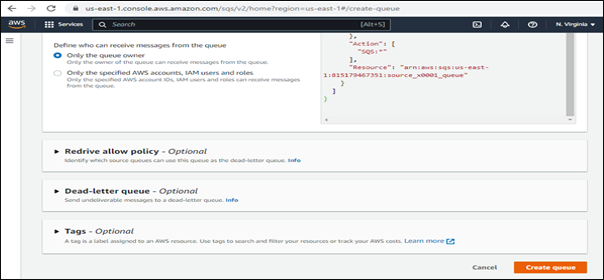

1. To create an Amazon SQS queue from the console, open the Amazon SQS console at https://console.aws.amazon.com/sqs/ and choose Create queue.

2. For Type, the Standard queue type is selected by default. Choose FIFO only if you need a FIFO queue. Note that Amazon S3 event notifications cannot use a FIFO queue as a destination, so keep the Standard type for this walkthrough.

3. Enter a name for your queue. A FIFO queue name must end with the .fifo suffix, and the suffix counts toward the 80-character queue name limit. Then choose Create queue.

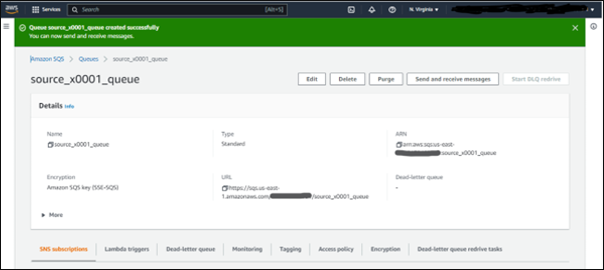

- You will see a confirmation that the queue was created successfully.
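You can also create the queue from Python. The following minimal sketch checks the naming rules described above and then calls `create_queue` on an SQS client, assumed to be `boto3.client("sqs")`. `validate_queue_name` and `create_sqs_queue` are hypothetical helpers, and the character rule (letters, digits, hyphens, underscores) is the SQS queue-name restriction.

```python
import re
from typing import Any

# Queue names may use letters, digits, hyphens and underscores, up to 80
# characters in total; a FIFO queue name must also end in ".fifo".
_BASE_NAME = re.compile(r"[A-Za-z0-9_-]+")
MAX_QUEUE_NAME_LENGTH = 80
FIFO_SUFFIX = ".fifo"


def validate_queue_name(name: str, fifo: bool = False) -> None:
    """Raise ValueError if `name` breaks the SQS queue naming rules."""
    # The .fifo suffix counts toward the 80-character limit.
    if len(name) > MAX_QUEUE_NAME_LENGTH:
        raise ValueError(f"queue name is longer than {MAX_QUEUE_NAME_LENGTH} characters")
    base = name
    if fifo:
        if not name.endswith(FIFO_SUFFIX):
            raise ValueError("FIFO queue names must end with '.fifo'")
        base = name[: -len(FIFO_SUFFIX)]
    if not _BASE_NAME.fullmatch(base):
        raise ValueError("queue names may only contain letters, digits, '-' and '_'")


def create_sqs_queue(sqs_client: Any, name: str, fifo: bool = False) -> str:
    """Create the queue and return its URL (`sqs_client` is e.g. boto3.client("sqs"))."""
    validate_queue_name(name, fifo)
    kwargs: dict = {"QueueName": name}
    if fifo:
        # FIFO queues must be requested explicitly; Standard is the default.
        kwargs["Attributes"] = {"FifoQueue": "true"}
    response = sqs_client.create_queue(**kwargs)
    return response["QueueUrl"]
```

For this walkthrough, call it with `fifo=False`, because S3 event notifications need a Standard queue.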

STEP – 2:

AWS S3 Bucket:

S3 stands for Simple Storage Service. It offers developers and IT teams secure, durable, and highly scalable object storage. It is simple to use and provides a basic web services interface for storing and retrieving data from anywhere on the internet.

Create S3 Bucket in AWS:

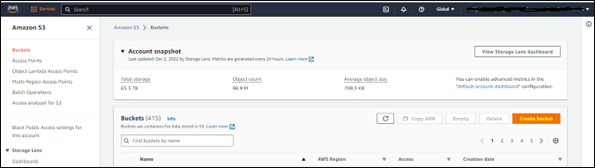

- Sign in to the AWS Management Console and open the Amazon S3 console at https://console.aws.amazon.com/s3/. Choose Buckets in the left navigation pane, then choose Create bucket.

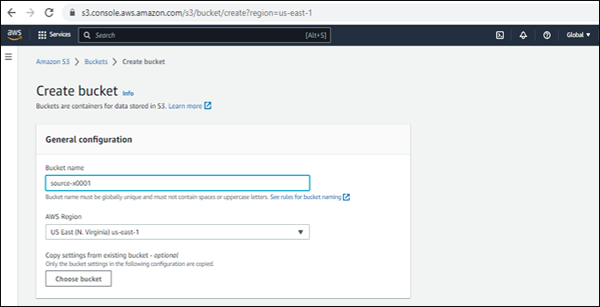

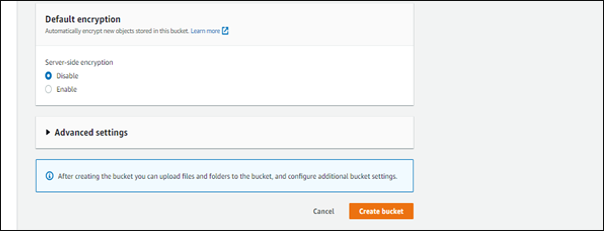

- The Create bucket page appears. Enter a name for your bucket in the Bucket name field, then choose Create bucket.

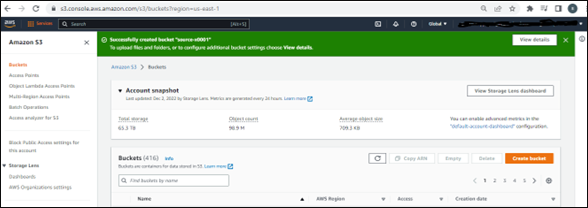

- You will see a pop-up confirming that the bucket was created successfully, as shown in the screenshot.
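The bucket can also be created from Python. The following minimal sketch assumes an S3 client such as `boto3.client("s3", region_name=...)` is passed in. It checks a simplified subset of the bucket naming rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit). `create_bucket` here is our own hypothetical wrapper, and the bucket names in the usage are examples.

```python
import re
from typing import Any, Optional

# Simplified bucket-name check: 3-63 characters, lowercase letters, digits,
# dots and hyphens, beginning and ending with a letter or digit.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def create_bucket(s3_client: Any, name: str, region: Optional[str] = None) -> None:
    """Create an S3 bucket; `s3_client` is expected to behave like boto3.client("s3")."""
    if not _BUCKET_NAME.fullmatch(name):
        raise ValueError(f"invalid bucket name: {name!r}")
    kwargs: dict = {"Bucket": name}
    # us-east-1 is the default location; any other region must be passed
    # explicitly as a LocationConstraint.
    if region and region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    s3_client.create_bucket(**kwargs)
```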

STEP – 3:

Create an event notification for the S3 bucket:

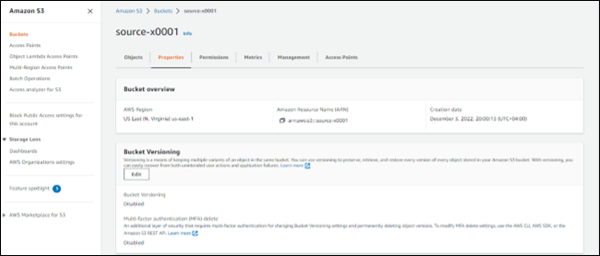

- Open the S3 bucket that we created and choose the Properties tab. You will see the Properties view shown in the screenshot.

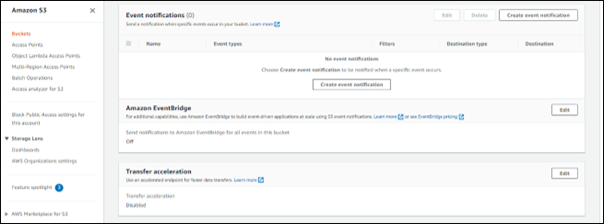

- In the Properties tab, find Event notifications and choose Create event notification.

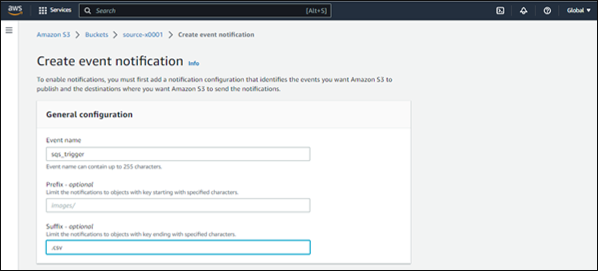

- Enter the event name. You can optionally add a prefix and a suffix to limit which files trigger the event.

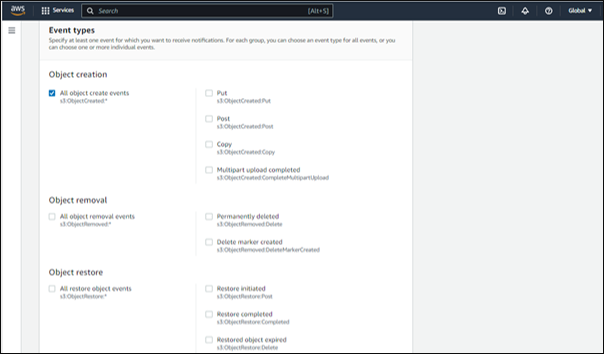

- Under Event types, choose All object create events. An event is then generated whenever a file is placed in the S3 bucket.

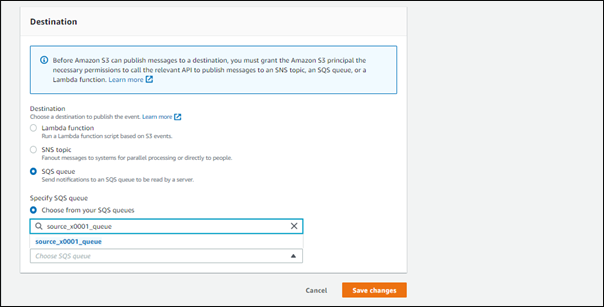

- Under Destination, choose SQS queue and select the queue we created in STEP – 1. Finally, save your changes. The event setup is now complete, and all matching events will be sent to your SQS queue.

- A pop-up confirms that the event notification was saved successfully.
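The same event notification can be configured from Python. The following minimal sketch assumes an S3 client such as `boto3.client("s3")` is passed in. `build_queue_notification`, `apply_notification` and the default `config_id` are hypothetical names. `"s3:ObjectCreated:*"` is the event type behind the console's "All object create events" option.

```python
from typing import Any, Dict, List, Optional


def build_queue_notification(queue_arn: str, prefix: Optional[str] = None,
                             suffix: Optional[str] = None,
                             config_id: str = "s3-to-sqs") -> Dict[str, Any]:
    """Build a NotificationConfiguration that sends object-create events to SQS."""
    queue_config: Dict[str, Any] = {
        "Id": config_id,
        "QueueArn": queue_arn,
        "Events": ["s3:ObjectCreated:*"],  # all object create events
    }
    # Optional key-name filters, matching the console's prefix/suffix fields.
    rules: List[Dict[str, str]] = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})
    if rules:
        queue_config["Filter"] = {"Key": {"FilterRules": rules}}
    return {"QueueConfigurations": [queue_config]}


def apply_notification(s3_client: Any, bucket: str, config: Dict[str, Any]) -> None:
    """Replace the bucket's notification configuration with `config`."""
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket, NotificationConfiguration=config)
```

`put_bucket_notification_configuration` replaces the bucket's entire notification configuration. If the bucket already has other notifications, merge them into `config` first.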

STEP – 4:

Create Lambda Function:

Lambda Function:

AWS Lambda is a serverless compute service. It runs your code in response to events and automatically manages the underlying compute resources.

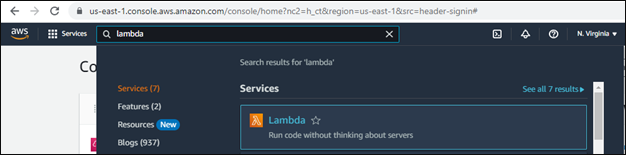

- Search for Lambda in the AWS console's service search. The Lambda service appears as shown in the screenshot.

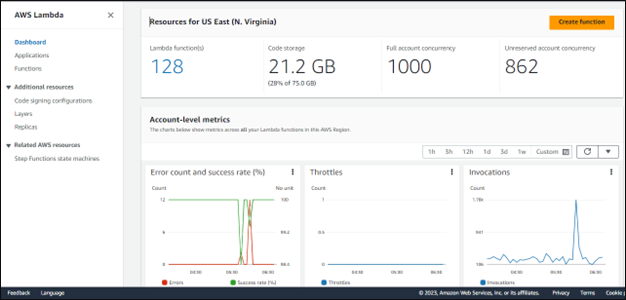

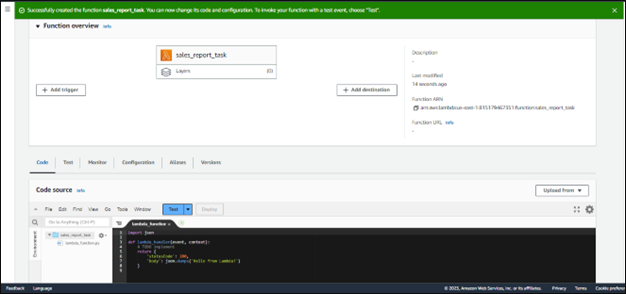

- Choose Create function to open the console shown in the screenshot.

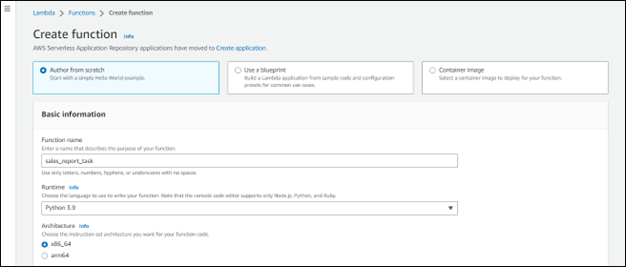

- On the Create function page, provide the basic information for the new Lambda function, such as the function name and runtime. NOTE: We use a Python runtime for this function.

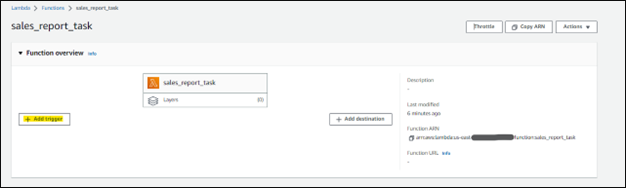

- Choose Create function. Once the function is ready, the default Lambda handler template appears in the Code source editor. Replace it with your own Python code. The function can then run in response to events or on a schedule.
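For reference, the default handler that the console generates for a Python runtime looks essentially like the code below. The exact comments may differ between console versions. The console calls `lambda_handler` with the trigger's `event` payload and a runtime `context` object. This is the function you replace with your own logic.

```python
import json


def lambda_handler(event, context):
    # `event` carries the trigger's payload (for an SQS trigger, a batch of
    # messages under "Records"); `context` exposes runtime details such as
    # the request ID and remaining execution time.
    # TODO implement
    return {
        'statusCode': 200,
        'body': json.dumps('Hello from Lambda!')
    }
```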

STEP – 5:

ADD SQS trigger to the Lambda function:

- In the Function overview panel, choose Add trigger.

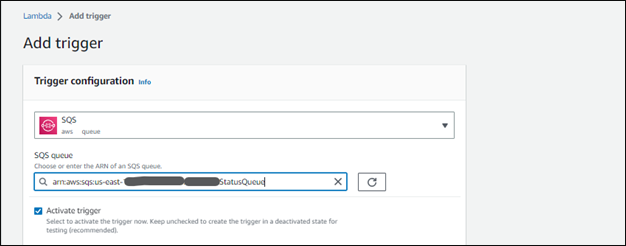

- You will be taken to the trigger configuration page. Choose SQS as the source, select the queue you created in STEP – 1, and choose Add. The SQS trigger is then added to your Lambda function.

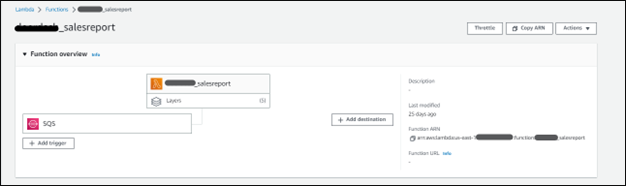

- You will see that the SQS trigger has been added to your Lambda function.
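Behind the console, an SQS trigger is an event source mapping. The following minimal sketch creates one from Python, assuming a client such as `boto3.client("lambda")` is passed in. `add_sqs_trigger` is a hypothetical helper. The function name and queue ARN would be the ones from STEP – 1 and STEP – 4.

```python
from typing import Any


def add_sqs_trigger(lambda_client: Any, function_name: str, queue_arn: str,
                    batch_size: int = 10) -> str:
    """Map an SQS queue to a Lambda function and return the mapping's UUID.

    `lambda_client` is expected to behave like boto3.client("lambda").
    """
    # Batch sizes above 10 also require MaximumBatchingWindowInSeconds,
    # which this sketch does not set, so we stay within 1-10.
    if not 1 <= batch_size <= 10:
        raise ValueError("batch_size must be between 1 and 10 in this sketch")
    response = lambda_client.create_event_source_mapping(
        EventSourceArn=queue_arn,
        FunctionName=function_name,
        BatchSize=batch_size,  # maximum messages handed to one invocation
        Enabled=True,
    )
    return response["UUID"]
```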

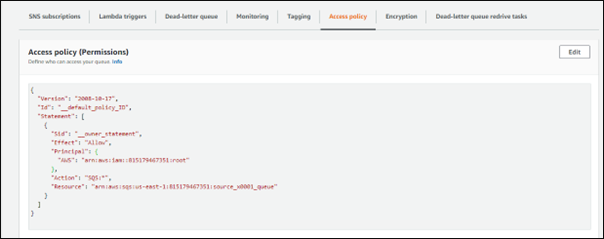

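NOTE: Two permissions are needed to connect these services. First, the queue needs an access policy that allows Amazon S3 to send messages to it; otherwise S3 rejects the queue as an event notification destination. Second, the Lambda function's execution role needs permission to read from the queue (sqs:ReceiveMessage, sqs:DeleteMessage, and sqs:GetQueueAttributes, for example through the AWSLambdaSQSQueueExecutionRole managed policy); otherwise you cannot add the SQS trigger to your Lambda function. The template below is the SQS access policy for S3.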

Policy Template:

```json
{
  "Version": "2012-10-17",
  "Id": "example-ID",
  "Statement": [
    {
      "Sid": "example-statement-ID",
      "Effect": "Allow",
      "Principal": {
        "Service": "s3.amazonaws.com"
      },
      "Action": [
        "SQS:SendMessage"
      ],
      "Resource": "SQS-queue-ARN",
      "Condition": {
        "ArnLike": {
          "aws:SourceArn": "arn:aws:s3:*:*:awsexamplebucket1"
        },
        "StringEquals": {
          "aws:SourceAccount": "bucket-owner-account-id"
        }
      }
    }
  ]
}
```
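You can also generate and attach the policy template from Python. The following minimal sketch fills the template's placeholders (queue ARN, bucket name, account ID) and sets the policy with `set_queue_attributes`, assuming a client such as `boto3.client("sqs")` is passed in. `build_s3_to_sqs_policy` and `attach_policy` are hypothetical helpers. The bucket name and account ID in the usage are examples.

```python
import json
from typing import Any, Dict


def build_s3_to_sqs_policy(queue_arn: str, bucket_name: str,
                           account_id: str) -> Dict[str, Any]:
    """Return an SQS access policy that lets one bucket send messages to the queue."""
    return {
        "Version": "2012-10-17",
        "Id": "example-ID",
        "Statement": [{
            "Sid": "example-statement-ID",
            "Effect": "Allow",
            "Principal": {"Service": "s3.amazonaws.com"},
            "Action": ["SQS:SendMessage"],
            "Resource": queue_arn,
            "Condition": {
                # Only notifications from this bucket are allowed...
                "ArnLike": {"aws:SourceArn": f"arn:aws:s3:*:*:{bucket_name}"},
                # ...and only when the bucket belongs to the expected account.
                "StringEquals": {"aws:SourceAccount": account_id},
            },
        }],
    }


def attach_policy(sqs_client: Any, queue_url: str, policy: Dict[str, Any]) -> None:
    """Set the queue's access policy; SQS expects the policy as a JSON string."""
    sqs_client.set_queue_attributes(
        QueueUrl=queue_url, Attributes={"Policy": json.dumps(policy)})
```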

STEP – 6:

Triggering jobs from S3 events with a Python Lambda function:

- Once the setup is complete, every file uploaded to the S3 bucket produces an event message in the SQS queue. The Lambda function is then invoked automatically, reads the event payload from SQS, and passes it on to the next task in the flow.

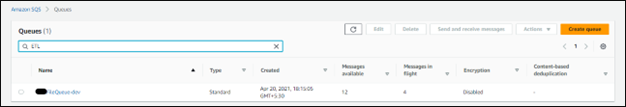

A snapshot of the SQS queue. Messages appear as files are uploaded to the S3 bucket.

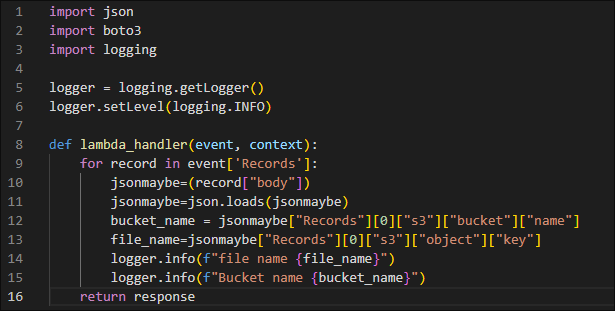

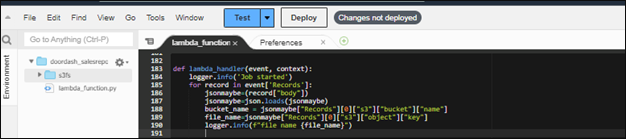

- The Python script that follows receives the SQS message and parses the bucket and file names. We can pass these values as inputs to any job that reads the file. Inside the Lambda handler, you can write your own code, functions, and utilities, and trigger jobs based on your own criteria.

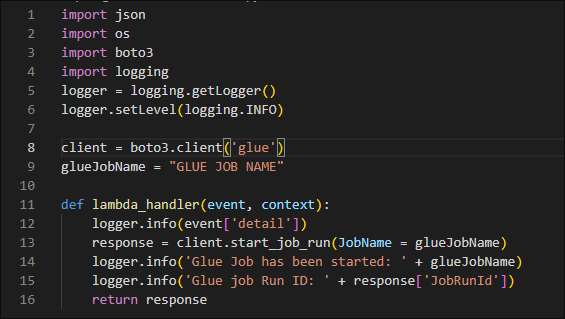

Code snippet for the Lambda function.
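The script in the screenshot is not reproduced as text. The following minimal sketch shows the same idea, assuming the standard event shapes: each SQS record's `body` is the S3 event notification as a JSON string, and that notification holds its own list of records. `extract_s3_objects` is a hypothetical helper, and the `print` stands in for whatever job you trigger.

```python
import json
from typing import Any, Dict, List, Tuple
from urllib.parse import unquote_plus


def extract_s3_objects(event: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs from an SQS-triggered Lambda event."""
    objects = []
    for sqs_record in event.get("Records", []):
        body = json.loads(sqs_record["body"])
        # When the notification is first saved, S3 sends an "s3:TestEvent"
        # message that has no "Records", so default to an empty list.
        for s3_record in body.get("Records", []):
            bucket = s3_record["s3"]["bucket"]["name"]
            # Object keys arrive URL-encoded (for example, a space becomes "+").
            key = unquote_plus(s3_record["s3"]["object"]["key"])
            objects.append((bucket, key))
    return objects


def lambda_handler(event, context):
    objects = extract_s3_objects(event)
    for bucket, key in objects:
        # Placeholder: start your downstream job here with bucket and key.
        print(f"New object: s3://{bucket}/{key}")
    return {"processed": len(objects)}
```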

Launching a Glue job from the Lambda function with a Python script, driven by SQS events.

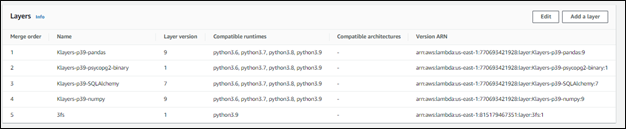
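Once the handler has the bucket and file name from the SQS message, starting a Glue job is a single API call. The following minimal sketch assumes a client such as `boto3.client("glue")` is passed in. `start_glue_job`, the job name in the usage, and the `--source_bucket`/`--source_key` argument names are hypothetical. Glue job arguments must start with `--`.

```python
from typing import Any, Dict


def start_glue_job(glue_client: Any, job_name: str, bucket: str, key: str) -> str:
    """Start a Glue job run for one S3 object and return its JobRunId.

    Inside the Glue script, these values can be read with
    awsglue.utils.getResolvedOptions(sys.argv, ["source_bucket", "source_key"]).
    """
    arguments: Dict[str, str] = {
        "--source_bucket": bucket,  # hypothetical argument names
        "--source_key": key,
    }
    response = glue_client.start_job_run(JobName=job_name, Arguments=arguments)
    return response["JobRunId"]
```

Call it once for each (bucket, key) pair parsed from the SQS message. Each file then gets its own job run.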

NOTE: To use external libraries in your Lambda function, either add them to a Lambda layer you have already created, or specify the ARN of a layer that contains the library, as shown in the picture.
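Attaching a layer by ARN can also be scripted. The following minimal sketch assumes a client such as `boto3.client("lambda")` is passed in. `add_layer` is a hypothetical helper. The `Layers` parameter of `update_function_configuration` replaces the function's whole layer list, so the sketch resends the existing layer ARNs along with the new one. A function can use at most five layers.

```python
from typing import Any, List

MAX_LAYERS = 5  # a Lambda function can use at most five layers


def add_layer(lambda_client: Any, function_name: str,
              layer_version_arn: str) -> List[str]:
    """Attach a layer version to a function, keeping the layers it already uses.

    Returns the resulting list of layer version ARNs.
    """
    config = lambda_client.get_function_configuration(FunctionName=function_name)
    # Existing layers are returned as objects with an "Arn" key.
    arns = [layer["Arn"] for layer in config.get("Layers", [])]
    if layer_version_arn in arns:
        return arns  # already attached; nothing to update
    if len(arns) >= MAX_LAYERS:
        raise ValueError("function already uses the maximum number of layers")
    arns.append(layer_version_arn)
    # Layers replaces the whole list, so the old ARNs are sent again here.
    lambda_client.update_function_configuration(FunctionName=function_name, Layers=arns)
    return arns
```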

Conclusion:

In an event-driven architecture, which is common in today's microservices-based systems, events are used to trigger and communicate between decoupled services. An event is a change in state or an update, such as an item being added to the shopping cart on an e-commerce website. Events can carry state, such as the purchased item, its price, and a shipping address, or they can be notifications, such as a message that an order has been shipped.

Author Bio:

Reddy Sreenivas

Specialist - Data Engineering

I've been with MOURI Tech for 3.7 years and have a strong background in event-driven stream data processing on Amazon Web Services. I build comprehensive ETL data pipelines (Infrastructure as a Service, Platform as a Service), and I have expertise in designing and delivering container architectures on AWS and in automating big data and analytics deployments on containerized infrastructure.