Purpose of the article: To explain ETL, the process of extracting, transforming, and loading data from various sources into a unified format for analysis and reporting.

Intended Audience: Database developers, data engineers, and data analysts.

Tools and Technology: Talend, Azure Data Factory

Keywords: ETL, ELT

ETL – An Overview:

Definition and Purpose:

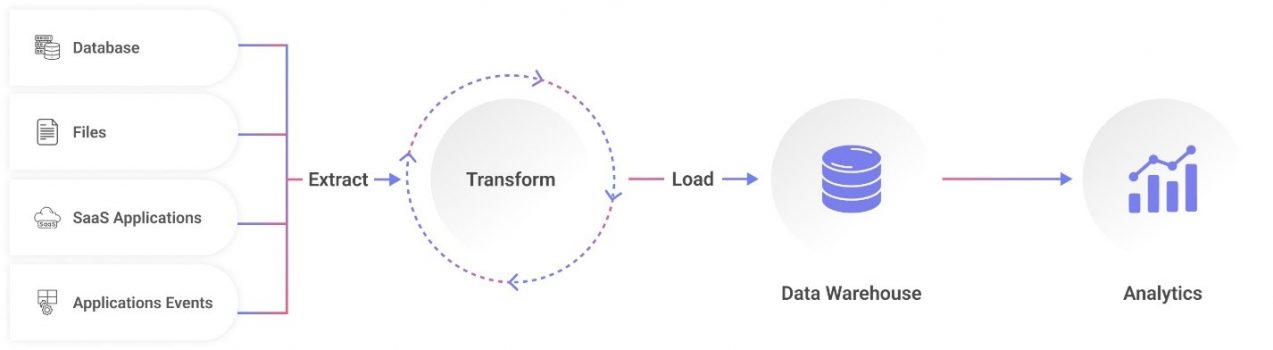

- ETL stands for Extract, Transform, Load. In data management, its primary purpose is to move data efficiently from various sources, transform it into a format suitable for analysis or storage, and load it into a target destination such as a data warehouse, database, or data lake.

- In doing so, ETL consolidates data from diverse sources into a central repository for analysis.

Key Components:

- ETL has three main stages: Extract, Transform, and Load.

- Each stage plays a distinct role in the overall data integration process.

What is ETL:

- Extract

- Transform

- Load

Extract – Gathering Data from Sources:

Source Systems:

- Data can be extracted from a wide variety of sources, such as databases, APIs, and logs.

- Understanding the structure and format of the source data is essential before extraction begins.

Extraction Methods:

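- Common extraction methods include full extraction (reading the entire source on every run), incremental extraction (reading only the records changed since the last run), and Change Data Capture (CDC), which captures inserts, updates, and deletes as they happen in the source, often by reading its transaction log.

- Choose an extraction method based on data volume and update frequency: full extraction suits small or rarely changing datasets, while incremental extraction and CDC suit large or frequently updated ones.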
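
The difference between full and incremental extraction can be sketched with a "high-watermark" column. This is a minimal illustration, assuming a hypothetical `customers` table with an `updated_at` timestamp stored as ISO 8601 text; an in-memory SQLite database stands in for the real source system. CDC tools work differently (they read change events rather than querying a timestamp), so they are not shown here.

```python
import sqlite3

def extract_full(conn):
    """Full extraction: read every row from the source table."""
    return conn.execute(
        "SELECT id, name, updated_at FROM customers ORDER BY id"
    ).fetchall()

def extract_incremental(conn, last_watermark):
    """Incremental extraction: read only rows changed after last_watermark.

    Returns the changed rows and the new watermark to persist for the next run.
    ISO 8601 timestamps in one fixed format compare correctly as strings.
    """
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark to the newest change seen; keep it if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Hypothetical source table used only for this demonstration.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "Asha", "2024-01-01T10:00:00"),
        (2, "Ben", "2024-01-02T09:30:00"),
        (3, "Chen", "2024-01-03T14:15:00"),
    ],
)

all_rows = extract_full(source)
changed, watermark = extract_incremental(source, "2024-01-01T23:59:59")
print(len(all_rows), [r[0] for r in changed], watermark)
```

In a real pipeline, the watermark would be stored between runs (for example, in a control table) so that each run picks up only what changed since the previous one.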

Transform – Shaping Data for Analysis:

Data Cleaning:

- De-duplication: Eliminate duplicate records to ensure data consistency and accuracy.

- Data Standardization: Standardize formats (e.g., dates, addresses) for uniformity.

- Data Quality Checks: Implement checks to identify and address data quality issues such as outliers or anomalies.
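
The three cleaning steps above can be sketched in plain Python. This is an illustrative sketch only: the record fields (`order_id`, `customer`, `order_date`, `amount`), the two accepted date formats, and the `max_amount` limit are all hypothetical assumptions, and a simple range rule stands in for more sophisticated outlier detection.

```python
from datetime import datetime

# Assumed source date formats: ISO dates and day/month/year.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")

def standardize_date(value):
    """Convert a date string in any known format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None  # unparseable; the quality check below flags it

def clean(records, max_amount=10_000):
    """De-duplicate, standardize, and quality-check raw order records.

    Returns (cleaned, rejected) lists of dicts.
    """
    seen_ids = set()
    cleaned, rejected = [], []
    for rec in records:
        # De-duplication: keep only the first record for each order_id.
        if rec["order_id"] in seen_ids:
            continue
        seen_ids.add(rec["order_id"])
        # Standardization: trim and title-case names, convert dates to ISO 8601.
        row = {
            "order_id": rec["order_id"],
            "customer": rec["customer"].strip().title(),
            "order_date": standardize_date(rec["order_date"]),
            "amount": rec["amount"],
        }
        # Quality check: reject unparseable dates and out-of-range amounts.
        if row["order_date"] is None or not (0 <= row["amount"] <= max_amount):
            rejected.append(row)
        else:
            cleaned.append(row)
    return cleaned, rejected

raw = [
    {"order_id": 1, "customer": " asha rao ", "order_date": "2024-01-05", "amount": 120.0},
    {"order_id": 1, "customer": "Asha Rao", "order_date": "05/01/2024", "amount": 120.0},
    {"order_id": 2, "customer": "ben", "order_date": "06/01/2024", "amount": 75.5},
    {"order_id": 3, "customer": "chen", "order_date": "not a date", "amount": 40.0},
    {"order_id": 4, "customer": "dev", "order_date": "2024-01-07", "amount": -999.0},
]
cleaned, rejected = clean(raw)
for row in cleaned:
    print(row)
```

Rejected rows are kept rather than silently dropped, so they can be reviewed or routed to an error table.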

Data Transformation:

- Data Formatting: Convert data types to match the requirements of the target system.

- Data Enrichment: Enhance the dataset by adding relevant information from external sources.

- Data Normalization: Adjust data values to conform to a standard scale, facilitating fair comparisons.
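
A minimal sketch of these transformations follows, assuming hypothetical input rows whose fields all arrive as strings. The `REGION_BY_COUNTRY` dictionary is a made-up stand-in for an external enrichment source, and min-max scaling to the range [0, 1] is used as one common way to normalize values.

```python
# Hypothetical reference data standing in for an external enrichment source.
REGION_BY_COUNTRY = {"IN": "APAC", "US": "AMER", "DE": "EMEA"}

def transform(rows):
    """Cast types, enrich with a region, and min-max scale revenue."""
    # Data formatting: cast string fields to the types the target expects.
    typed = [
        {"id": int(r["id"]), "country": r["country"], "revenue": float(r["revenue"])}
        for r in rows
    ]
    if not typed:
        return typed
    # Data enrichment: add a region looked up from the reference data.
    for r in typed:
        r["region"] = REGION_BY_COUNTRY.get(r["country"], "UNKNOWN")
    # Data normalization: min-max scale revenue into [0, 1].
    values = [r["revenue"] for r in typed]
    lo, hi = min(values), max(values)
    span = hi - lo
    for r in typed:
        r["revenue_scaled"] = (r["revenue"] - lo) / span if span else 0.0
    return typed

result = transform([
    {"id": "1", "country": "IN", "revenue": "200"},
    {"id": "2", "country": "US", "revenue": "1000"},
    {"id": "3", "country": "BR", "revenue": "600"},
])
for row in result:
    print(row)
```

Unknown lookup keys map to `"UNKNOWN"` instead of raising an error, which keeps the batch moving while still making the gap visible downstream.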

Load – Bringing Data Into Its Destination:

Staging Area:

- Before being loaded into the target system, transformed data is often placed in an intermediate storage area. This staging area serves as a temporary repository for the transformed data.

- Staging allows for additional checks and validations before the data is committed to the target system.
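
The staging pattern can be sketched with SQLite from Python's standard library. The table names `stg_orders` and `orders` and the validation rule (no missing or negative amounts) are hypothetical. The key idea is that staging, validation, and publishing share one transaction, so a failed check leaves the target table untouched.

```python
import sqlite3

def load_via_staging(conn, rows):
    """Stage rows, validate them, then publish them to the target table.

    Everything runs in one transaction: if validation fails, the staged rows
    are rolled back and nothing reaches the target table.
    """
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute("DELETE FROM stg_orders")
        conn.executemany(
            "INSERT INTO stg_orders (order_id, amount) VALUES (?, ?)", rows
        )
        # Validate the staged data before it touches the target.
        bad = conn.execute(
            "SELECT COUNT(*) FROM stg_orders WHERE amount IS NULL OR amount < 0"
        ).fetchone()[0]
        if bad:
            raise ValueError(f"{bad} staged row(s) failed validation")
        staged = conn.execute("SELECT COUNT(*) FROM stg_orders").fetchone()[0]
        conn.execute(
            "INSERT INTO orders (order_id, amount) "
            "SELECT order_id, amount FROM stg_orders"
        )
    return staged

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stg_orders (order_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL)")

published = load_via_staging(conn, [(1, 120.0), (2, 75.5)])
try:
    load_via_staging(conn, [(3, 40.0), (4, -5.0)])  # contains an invalid row
except ValueError as exc:
    print("batch rejected:", exc)
total = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(published, total)
```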

Loading Strategies:

- Bulk Loading: Loads a large volume of data in a single batch. Suitable for scenarios where a significant amount of data needs to be transferred.

- Incremental Loading: Loads only the data that is new or modified since the last extraction, reducing the amount of data transferred and improving efficiency.

- Parallel Loading: Divides the data into multiple subsets and loads them concurrently, using multiple processing resources to improve loading performance. Effective for handling large datasets.
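
The three loading strategies can be sketched as follows. This is an illustration under stated assumptions: a hypothetical `products` table keyed on `sku`, SQLite's `INSERT OR REPLACE` standing in for whatever upsert or `MERGE` statement your target system supports, and a caller-supplied `load_partition` function standing in for a real, thread-safe write to the target.

```python
import sqlite3
import threading
from concurrent.futures import ThreadPoolExecutor

def bulk_load(conn, rows):
    """Bulk loading: insert a whole batch in a single transaction."""
    with conn:
        conn.executemany("INSERT INTO products (sku, price) VALUES (?, ?)", rows)

def incremental_load(conn, changed_rows):
    """Incremental loading: apply only new or changed rows, keyed on sku.

    INSERT OR REPLACE overwrites any existing row with the same primary key.
    """
    with conn:
        conn.executemany(
            "INSERT OR REPLACE INTO products (sku, price) VALUES (?, ?)", changed_rows
        )

def parallel_load(rows, load_partition, workers=4):
    """Parallel loading: split rows into partitions and load them concurrently.

    load_partition is a caller-supplied function that writes one partition to
    the target and returns the number of rows written; it must be thread-safe.
    """
    partitions = [rows[i::workers] for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(load_partition, [p for p in partitions if p]))

# Bulk and incremental demo with an in-memory SQLite table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price REAL)")
bulk_load(conn, [("A1", 10.0), ("B2", 20.0), ("C3", 30.0)])
incremental_load(conn, [("B2", 22.5), ("D4", 40.0)])  # one update, one insert
products = conn.execute("SELECT sku, price FROM products ORDER BY sku").fetchall()
print(products)

# Parallel demo: a stand-in loader that collects rows under a lock.
loaded, lock = [], threading.Lock()

def collect_partition(part):
    with lock:
        loaded.extend(part)
    return len(part)

total = parallel_load(list(range(10)), collect_partition, workers=3)
print(total, sorted(loaded) == list(range(10)))
```

Whether parallel loading actually speeds things up depends on the target: systems that serialize writes (SQLite is one) gain little, while distributed warehouses can ingest partitions concurrently.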

Ensuring Data Quality and Reliability:

Data Quality Checks:

- Implement robust data quality checks at various stages of the ETL process.

- Check for completeness, accuracy, consistency, and conformity to pre-defined business rules.

- Flag or handle records that do not meet quality standards.
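
One way to implement these checks is a function that returns the list of rule violations for each record. The sketch below assumes hypothetical customer records. The simplified email pattern, the `VALID_COUNTRIES` reference list, and the credit-limit range are illustrative rules only, one for each of completeness, accuracy, consistency, and business-rule conformity.

```python
import re

# Simplified email format check; production rules are usually stricter.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
VALID_COUNTRIES = {"IN", "US", "DE"}  # hypothetical reference list
REQUIRED = ("customer_id", "email", "country", "credit_limit")

def check_record(rec):
    """Return the list of rule violations for one record (empty = passes)."""
    issues = []
    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED:
        if rec.get(field) in (None, ""):
            issues.append(f"missing {field}")
    # Accuracy / conformity: email must match the expected format.
    email = rec.get("email")
    if email and not EMAIL_RE.match(email):
        issues.append("invalid email")
    # Consistency: country codes must come from the shared reference list.
    country = rec.get("country")
    if country and country not in VALID_COUNTRIES:
        issues.append("unknown country")
    # Business rule (hypothetical): credit limit must be between 0 and 50,000.
    limit = rec.get("credit_limit")
    if limit not in (None, "") and not (0 <= limit <= 50_000):
        issues.append("credit_limit out of range")
    return issues

def split_by_quality(records):
    """Separate passing records from flagged ones, attaching the reasons."""
    passed, flagged = [], []
    for rec in records:
        issues = check_record(rec)
        if issues:
            flagged.append({**rec, "issues": issues})
        else:
            passed.append(rec)
    return passed, flagged

customers = [
    {"customer_id": 1, "email": "asha@example.com", "country": "IN", "credit_limit": 5000},
    {"customer_id": 2, "email": "ben-at-example", "country": "US", "credit_limit": 1000},
    {"customer_id": 3, "email": "", "country": "FR", "credit_limit": 90000},
]
passed, flagged = split_by_quality(customers)
for rec in flagged:
    print(rec["customer_id"], rec["issues"])
```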

Data Profiling:

- Before starting the ETL process, perform data profiling to understand the structure, patterns, and quality of the source data.

- Identify potential issues such as missing values, outliers, and inconsistent data formats.
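
A basic profile can be computed per column before any transformation runs. In the sketch below, the sample rows are hypothetical. The "pattern" idea (digits become `9`, letters become `A`) is one common profiling technique for spotting inconsistent formats, and the numeric minimum and maximum give a quick hint of outliers.

```python
from collections import Counter

def value_pattern(value):
    """Reduce a value to its shape: digits -> 9, letters -> A, other chars kept."""
    return "".join(
        "9" if ch.isdigit() else "A" if ch.isalpha() else ch for ch in str(value)
    )

def profile(rows):
    """Profile each column of a list of dict rows."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v not in (None, "")]
        numeric = [v for v in present if isinstance(v, (int, float))]
        report[col] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "types": sorted({type(v).__name__ for v in present}),
            "patterns": Counter(value_pattern(v) for v in present),
            "min": min(numeric) if numeric else None,
            "max": max(numeric) if numeric else None,
        }
    return report

sample = [
    {"id": 1, "signup": "2024-01-05", "age": 34},
    {"id": 2, "signup": "05/01/2024", "age": None},
    {"id": 3, "signup": "2024-01-07", "age": 410},
]
for column, stats in profile(sample).items():
    print(column, stats)
```

Here the profile would surface a missing `age`, an implausible maximum age, and two different date formats in `signup`: exactly the kinds of issues the transformation stage then needs to handle.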

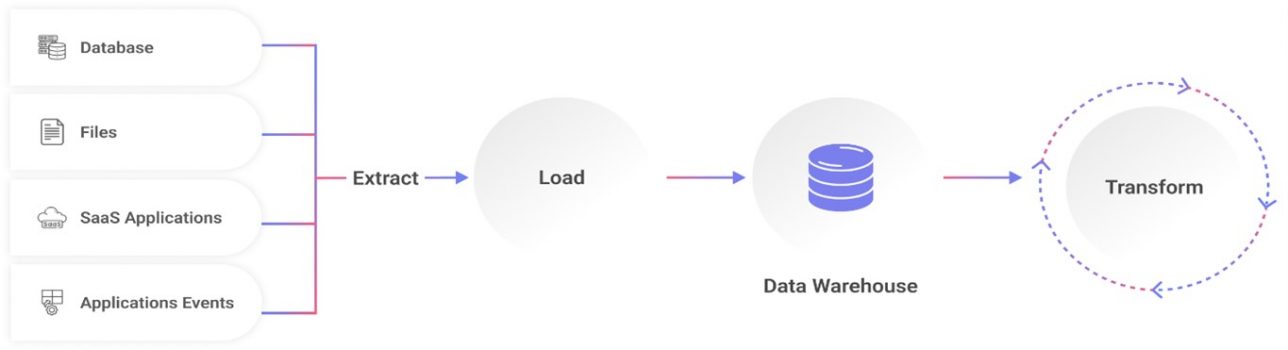

What is ELT (Extract, Load, Transform):

ELT is a data integration process in which data is first extracted from source systems, then loaded into a target data warehouse, and finally transformed within the data warehouse itself. The transformation takes place only after the data has been loaded into the destination system.
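
The ELT order of operations can be sketched with an in-memory SQLite database standing in for the data warehouse. The `raw_sales` and `sales_by_region` tables are hypothetical. The raw rows are landed untouched, and the cleanup and aggregation then run as SQL inside the "warehouse", using its own processing engine.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")  # stand-in for a data warehouse

# Extract + Load: land the source rows as-is, with no transformation.
warehouse.execute("CREATE TABLE raw_sales (region TEXT, amount TEXT)")
warehouse.executemany(
    "INSERT INTO raw_sales VALUES (?, ?)",
    [(" north", "100.50"), ("North", "49.50"), ("south ", "20")],
)

# Transform: clean and aggregate inside the warehouse with its own SQL engine.
warehouse.execute(
    """
    CREATE TABLE sales_by_region AS
    SELECT UPPER(TRIM(region)) AS region,
           SUM(CAST(amount AS REAL)) AS total_amount
    FROM raw_sales
    GROUP BY UPPER(TRIM(region))
    """
)
result = warehouse.execute(
    "SELECT region, total_amount FROM sales_by_region ORDER BY region"
).fetchall()
print(result)
```

Because the raw table is kept, the transformation can be rerun or changed later without extracting the data from the source again, which is one of the main practical attractions of ELT.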

- ETL vs. ELT:

| Method | Description | Data Transformation Location |
| --- | --- | --- |
| ETL | In ETL, data transformation occurs in a separate processing environment or staging area before the data is loaded into the data warehouse. The transformed data is then loaded into the warehouse for storage and analysis. | Separate processing environment or staging area |
| ELT | In ELT, the data transformation happens within the data warehouse itself. The data is extracted from source systems, loaded into the data warehouse, and then transformed using the processing capabilities of the data warehouse. | Within the data warehouse |

- ETL Tools

- List of On-Premises ETL (Extract, Transform, Load) Tools:

| Tool | Free/Open-Source | Commercial |
| --- | --- | --- |
| Apache NiFi | Yes | No |
| Talend | Yes | Yes |
| Informatica PowerCenter | No | Yes |
| Microsoft SSIS | No (included with a SQL Server license) | Yes |
| IBM InfoSphere DataStage | No | Yes |
| Oracle Data Integrator (ODI) | No | Yes |
| SAP Data Services | No | Yes |

- List of Cloud-based ETL (Extract, Transform, Load) Tools:

| Tool | Free Tier/Community Edition | Commercial |
| --- | --- | --- |
| AWS Glue (Amazon Web Services) | Yes (Free Tier) | Yes |
| Azure Data Factory (Microsoft Azure) | Yes (Limited Free Tier) | Yes |
| Google Cloud Dataflow (GCP) | No | Yes |
| SnapLogic | No | Yes |
| Talend Cloud | Yes (Limited Free Tier) | Yes |
| Stitch Data | Yes (Limited Free Tier) | Yes |
| Databricks (Azure Databricks) | No | Yes |

Conclusion

This blog set out to show how ETL supports effective data administration. It discussed the three primary steps of ETL (extraction, transformation, and loading) and emphasized their importance in preserving the accessibility and quality of data. Professionals who master ETL are better equipped to optimize operations, make informed decisions, and extract meaningful insights from data.

Author Bio:

Shefali Sanjay Mehta

Digital Transformation - Associate Software Engineer

Database development is my professional strength, and I enjoy learning about new technologies.