Purpose of the article: Branding

Intended Audience: Public

Tools and Technology: Azure Services

Keywords: Parsing Complex XML/NAXML, Azure Dataflow, complex xml with azure data factory.

Description: Data may be in any kind of source system and different file formats such as CSV, Excel, Json, XML, etc. There are requirements to parse complex XML/NAXML files and load required data fields into target systems.

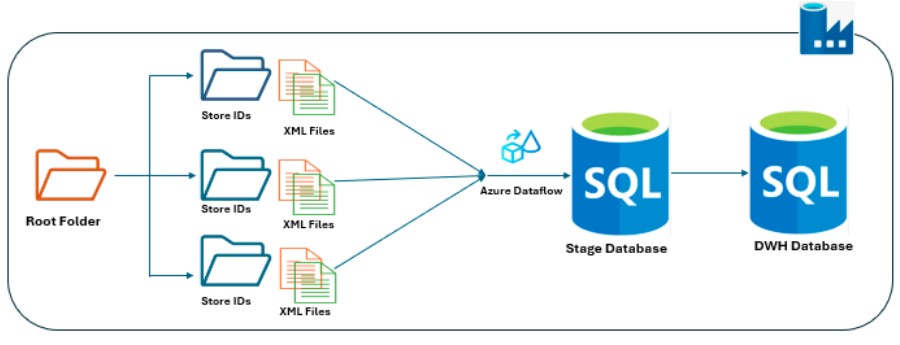

Flow Diagram:

Business Requirement:

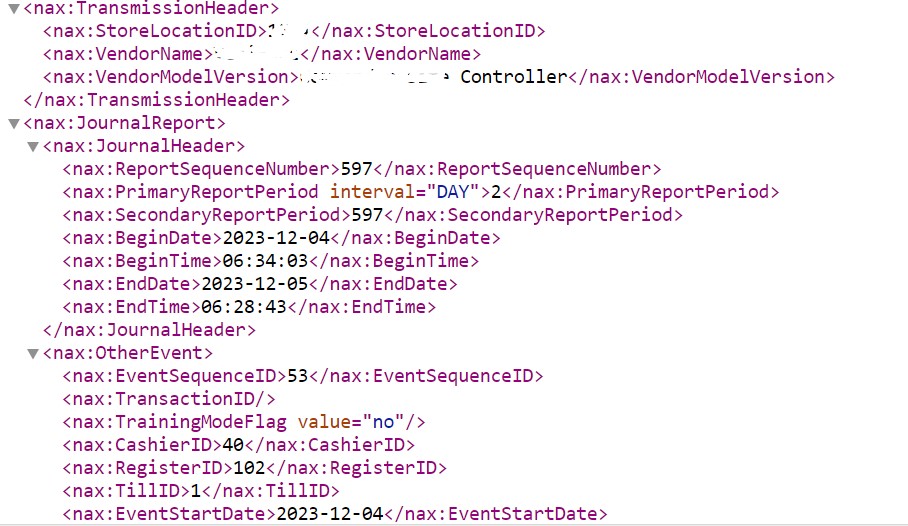

Source system is complex XML/NAXML files which contain multiple nodes and there are some nodes repeated within the parent node, which contains different data types like string, number, and flags and Boolean as well.

The NAXML is a file format that contains tags with <NAXML> and </NAXML> unlike regular <XML> tags. File formats are mentioned below.

These XML/NAXML files will be placed in an on-prem file system, with two different folder structures, one for XML files and another for NZXML files.

As mentioned in the above flow diagram these XML/NAXML files to be pulled from nested folder structure.

Folder structure looks like the following:

Date folder (date wise)

storeIds (250 folders)

XML/NAXML files.

Development Approach:

Step 1: ADF reads files from nested sub-folders of on-prem file system copy to two different ADLS Gen2 containers for XML and NAXML files.

Because dataflow will connect only azure related services like Azure SQL Database, Azure Blob Storage, and Azure Data Lake Gen2.

Step 2: To read files from nested ADLS, Gen2 sub-folders need to use foreach activities multiple times. And foreach within the foreach (in the form of execute pipeline).

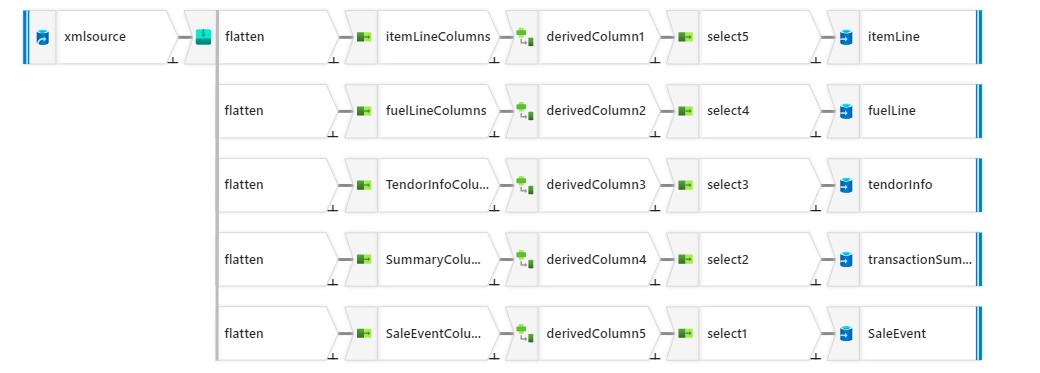

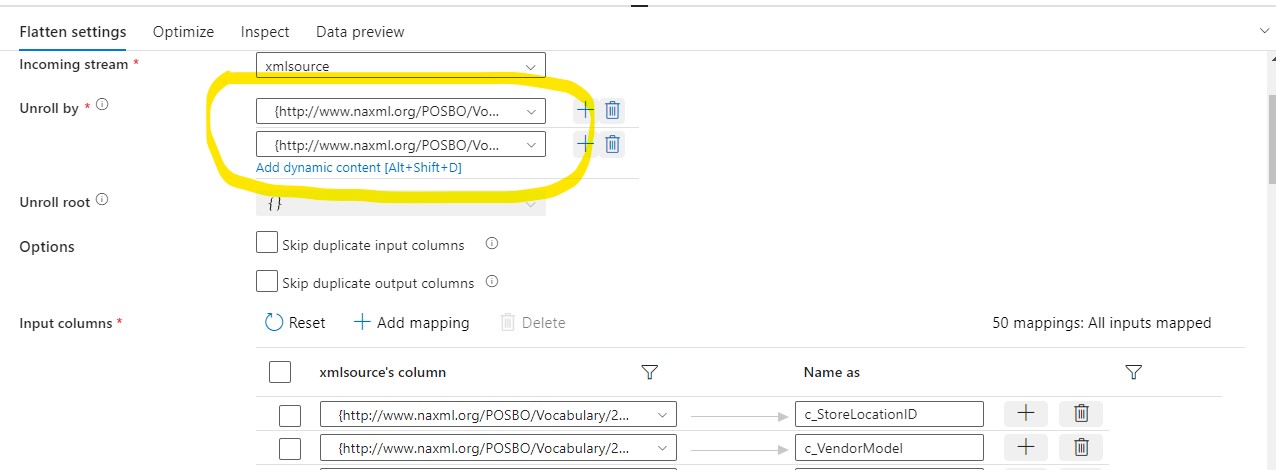

Step 3: Read the XML/NAXML file into dataflow, use flatten transformation and appropriate unroll nodes. It is better to unroll the file using parent node so that repeated data nodes are loaded into database tables, otherwise there will be a data loss.

From the following image, we can understand the flatten approach by root node to get all the child nodes without data loss.

Step 4: Separate the flattened fields into different tables using select transformation and then use derived column and again select transformations to properly identify the columns related to each table.

Step 5: Connect each related flow of data fields to separate sink transformation to different tables.

Here, in this approach, as the files are in nested folders in ADLS Gen2, to capture the whole path and pass to the dataflow activity, concatenate the entire path and pass as a parameter so that dataflow will be able to read files from ADLS Gen2 containers.

Since we are processing two different file formats, XML and NAXML need to parameterize the pipeline to pick the related files up from the ADLS Gen2 to file path based on the given parameter. If we pass XML as a parameter, it will pick up only XML files; otherwise, it will pickup NAXML files when we pass NAXML as a parameter.

Automation:

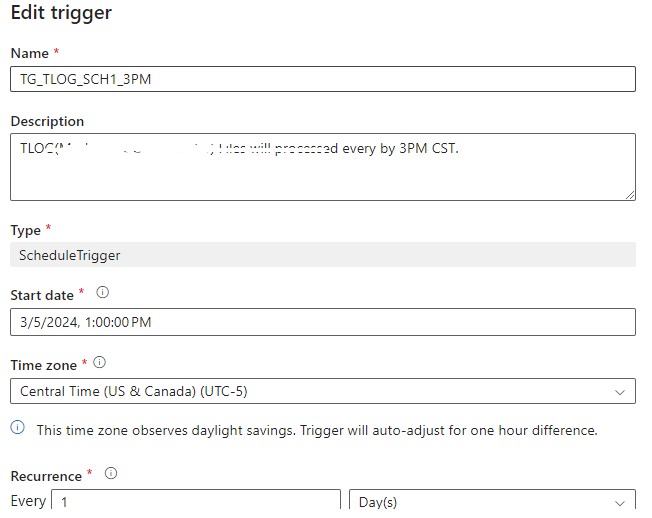

The whole approach from reading files from on-prem to loading data into Azure SQL database can be automated using triggers in ADF. We can set up two triggers, one for XML data and another for NAXML data with parameter at trigger level.

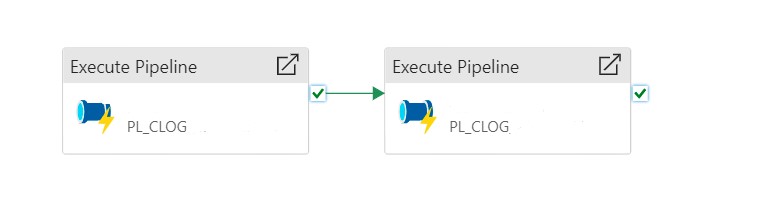

Alternatively, in a new pipeline we can add two execute pipelines and pass the individual parameters at pipeline level to connect those activities one after another, so that the file formats XML and NAXML will be picked up sequentially.

Add a trigger to the new pipeline, which calls two execute pipelines as below.

Conclusion:

To accomplish this business requirement, we need to parameterize the whole pipeline and pass the parameters to the internal child pipelines. To handle multiple foreach functionality, we will call the execute pipeline activities in the foreach activity and pass the required parameters. Eventually dataflow will be able to read the XML and NAXML files, flatten those files and load data into target database.

References:

- https://learn.microsoft.com/en-us/azure/data-factory/control-flow-execute-data-flow-activity

- https://learn.microsoft.com/en-us/azure/data-factory/data-flow-derived-column

- https://learn.microsoft.com/en-us/azure/data-factory/data-flow-select

- https://learn.microsoft.com/en-us/azure/data-factory/tutorial-data-flow-dynamic-columns

- https://learn.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-introduction

Author Bio:

Syam Kumar ANGALAKURTHI

Advanced Analytics - Technical Architect

Syam Kumar ANGALAKURTHI has 10Yrs of IT experience in ETL and Data warehousing solutions. Experience in Azure cloud services, with different industries like Oil & Gas, Banking, Finance and Healthcare