Purpose of the article: This blog post will guide you through the process of fetching data from an API and seamlessly loading it into a PostgreSQL database using Python.

Intended Audience: Data engineers, python developers, full-stack developers

Tools and Technology: Python, PostgreSQL

Keywords: APIs (Application Programming Interfaces) , SQL alchemy, DataFrame , Normalization ,JSON data.

In today’s interconnected world, the ability to retrieve and analyze data from various sources is crucial for informed decision-making. This blog post will guide you through the process of fetching data from an API and seamlessly loading it into a PostgreSQL database using Python.

Introduction

APIs (Application Programming Interfaces) provide a structured way for different software applications to communicate with each other. In this tutorial, we’ll focus on using the API layer to obtain currency exchange rate data. Once we retrieve the data, we’ll leverage Python’s Pandas library to process it and subsequently store it in a PostgreSQL database.

What is PostgreSQL?

PostgreSQL is an open-source database management system. It has many functions that are properly saved and scaled at very complex facts workloads. It is free and open source, and supports many languages like C/C++, Python, Java etc.

What is Python?

Python is a high-level, general-purpose, and interpreted programming language utilized in numerous sectors inclusive of system learning, facts engineering, synthetic intelligence, facts analysis, internet development, and lots more. Python is popular for its ease of use, effective fashionable library, and dynamic semantics. The major focus is making it easier for developers to read and understand, thereby also reducing the lines of code.

About Used API Data

Currency Data API provides a simple REST API with real-time and historical exchange rates for 168 world currencies, delivering currency pairs in universally usable JSON format – compatible with any of your applications.

Pls check this. Is it right?

Prerequisites

Before we begin, ensure you’ve got the important packages installed:

- Python

- Postgres Database

- API URL, API key

- Requests library (pip install requests)

- Pandas’ library (pip install pandas)

- SQL Alchemy library (pip install SQL alchemy)

Additionally, you’ll need access to a PostgreSQL database. If you don’t have one set up, you can install PostgreSQL and create a database using tools such as PgAdmin.

Overview of the POC

Here we are fetching the data from the Currency Data API by passing the API URL and API token through Python, which is getting data in JSON format, and it fetches currency exchange rate data from the API layer , processes it into a Data Frame, normalizes numeric columns, calculates an average rate, and then stores the data in a PostgreSQL database.

The Code

Let’s dive into the Python code that performs data retrieval, processing, and database storage.

- Import the Necessary Libraries:

- requests: for making HTTP requests to the API.

- pandas: for handling data in Data Frame format.

- create an engine from SQL Alchemy: for creating a database connection engine.

After importing libraries, give API details for reading the data and parameters for data.

- API Request & Execution:

Here, it executes the API request using requests. Get (). It handles potential exceptions and prints the status code if the request is successful. It also parses the JSON response into a Python dictionary (exchange_rate_data).

- Data Processing:

This section checks if exchange_rate_data exists. If it does, it extracts the exchange rate information (quotes) from the JSON response and processes it. It creates a list of dictionaries (data_list), with each dictionary representing currency exchange rate data for a specific currency.

- DataFrame Creation & Normalization:

Here, it converts the list of dictionaries (data_list) into a Data Frame (df). It then normalizes the numeric columns (Start_Rate, End_Rate, Change, Change_Pct) by scaling them between 0 and 1. It also calculates the average rate for each currency.

- Database Connection and Data Insertion:

This part establishes a connection to the PostgreSQL database using SQL Alchemy’s create_engine. It specifies the table name (table_name) and tries to insert the Data Frame (df) into the database table. If an error occurs during data insertion, it prints an error message. Finally, it closes the database connection using the engine. Dispose ().

# 1. Import necessary libraries

import requests

import pandas as pd

from sqlalchemy import create_engine

# 2. API and database credentials

url = "https://api.apilayer.com/currency_data/change?latest"

params = { "start_date": "2023-09-27", "end_date": "2023-09-28",

"base": "USD"

}

headers = {"apikey": "Give Your API key here"}

# API request

response = requests.get(url, params=params, headers=headers)# Check if the API request become successful

if response.status_code == 200:

print("Connected")

print("Successfully fetched the data")

# Process the API response

exchange_rate_data = response.json()

quotes = exchange_rate_data['quotes']

# 3. Prepare data for DataFrame

data_list = []

start_date = params['start_date']

end_date = params['end_date']

for currency, values in quotes.items():

data_list.append({

'Currency': currency,

'Start_Rate': values['start_rate'],

'End_Rate': values['end_rate'],

'Change': values['change'],

'Change_Pct': values['change_pct'],

'Start_Date': start_date,

'End_Date': end_date

})

# Create DataFrame

df = pd.DataFrame(data_list)

# 4. Normalize numeric columns

numeric_cols = ['Start_Rate', 'End_Rate', 'Change', 'Change_Pct']

df[numeric_cols] = (df[numeric_cols] - df[numeric_cols].min()) / (df[numeric_cols].max() - df[numeric_cols].min())

df['Average_rate'] = (df['Start_Rate'] + df['End_Rate']) / 2

# Display the normalized DataFrame

print(df)

# 5. Database connection

username = 'Your user name'

password = 'your password'

host = 'your host address'

database_name = 'your db name'

conn = f'postgresql://{username}:{password}@{host}/{database_name}'

engine = create_engine(conn)

# Load data into the PostgreSQL DB

table_name = 'api_test'

try:

# Load the DataFrame data into the PostgreSQL DB

df.to_sql(table_name, engine, if_exists='replace', index=False)

print('Table created ')

except Exception as e:

print('Error loading data to database', e)

finally:

# Close the database connection

engine.dispose()

else:

print(f"Error: {response.status_code}")

print(response.text)

Output:

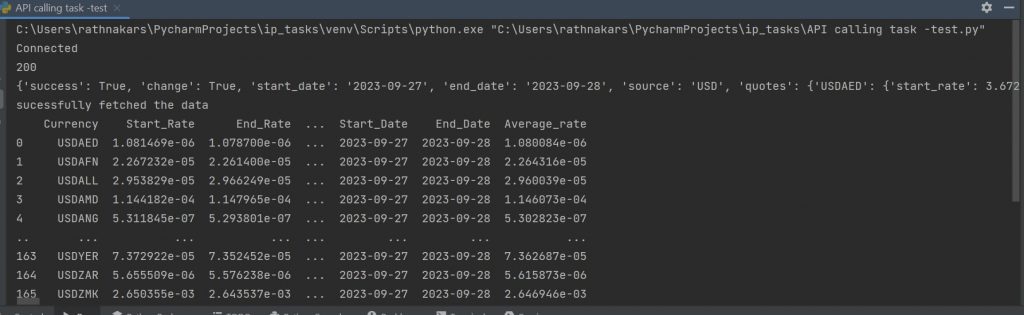

- The below screenshot output from PyCharm shows up after a successful connection establishment. We got a response status code 200; after a successful connection, it gives the JSON data and then we convert tabular format data.

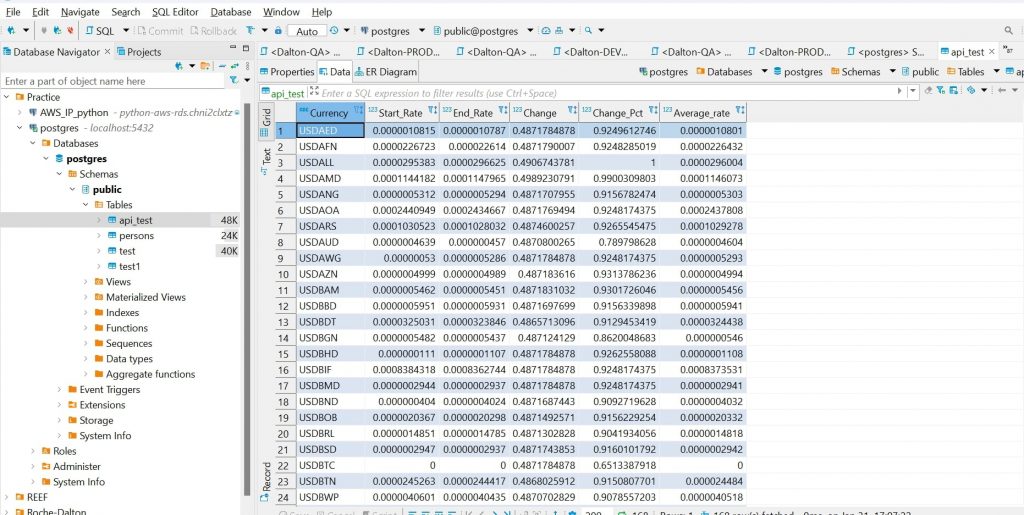

- The below screenshot gives the DB output, which is the final output of the data.

Conclusion

After following this blog’s POC, you have successfully implemented a Python script to fetch data from an API, process it, and store it in a PostgreSQL database. This capability opens the door to a variety of use cases, allowing you to automate data retrieval and analysis tasks effectively. By utilizing Python’s data manipulation strengths and PostgreSQL’s reliable data storage, you have crafted a scalable solution capable of handling large volumes of data and adjusting to evolving business needs. This achievement underscores your skill in leveraging technology to simplify processes and enhance efficiency in data-driven decision-making.

References:

- https://www.askpython.com/python/examples/pull-data-from-an-api

- https://apilayer.com/marketplace/currency_data-api

- https://python.plainenglish.io/importing-csv-data-into-postgresql-using-python-aee6b5b11816

- https://pandas.pydata.org/pandas-docs/stable/reference/api/pandas.DataFrame.html

- https://www.commandprompt.com/education/how-to-connect-to-postgresql-database-server-using-python/

- https://www.geeksforgeeks.org/how-to-append-a-list-as-a-row-to-a-pandas-dataframe-in-python/

Author Bio:

Rathnakar Reddy SAGILI

Associate Software Engineer - Data Engineering-Analytics

I am Rathnakar Reddy SAGILI working as Associate Software Engineer at MOURI Tech from the past 2.7 years. My Areas of expertise include SQL, PostgreSQL, Python and I have knowledge on AWS S3,AWS RDS, AWS Glue, AWS IAM, AWS lambda Function and Alteryx tool.