- Purpose of the Article: Branding Capabilities

- Intended Audience: Public

- Tools and Technology: Azure Data Factory, SAP SLT

- Keywords: SAP SLT, SAP CDC connector, Azure SAP CDC connector

In Part 1, we configured the Azure Data Factory data flow activity and covered all related settings.

What is ODP Context?

ODP (Operational Data Provisioning) is a framework in SAP ABAP applications for transferring data between systems.

ODP provides a technical infrastructure for data extraction and replication from different SAP (ABAP) systems, e.g., ECC, S/4HANA, BW, and BW/4HANA.

The extracting component acts as an ODP subscriber (consumer) and subscribes to a data provider, for example an SAP extractor or a CDS view.

Operational Data Provisioning supports mechanisms to load data incrementally, e.g., from extractors, ABAP CDS views, and DataStore objects (DSOs) (see the figure below). With SAP BW/4HANA, ODP becomes the central infrastructure for data extraction and replication from SAP (ABAP) applications to an SAP BW/4HANA data warehouse.

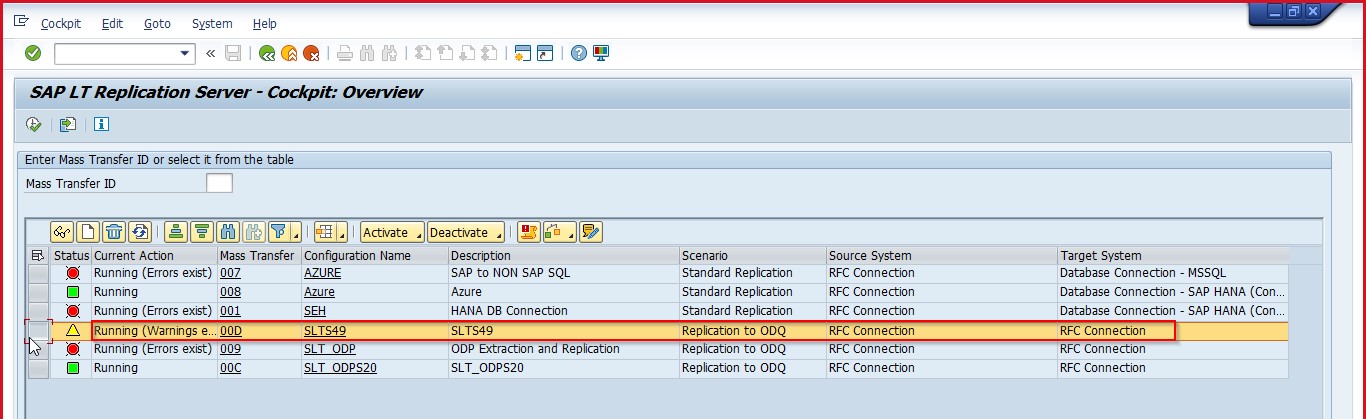
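To make the subscriber/provider idea and incremental loading more concrete, here is a minimal Python sketch of a delta queue. It is a toy model under stated assumptions, not the SAP ODP API: the class `DeltaQueue`, its methods, and the change codes are hypothetical. A subscriber's first request returns a full snapshot; later requests return only the changes recorded since that subscriber's previous request, which mirrors the full-then-delta runs described at the end of this article.

```python
from dataclasses import dataclass, field


@dataclass
class DeltaQueue:
    """Toy stand-in for an ODP delta queue (hypothetical, not the SAP API).

    The provider records changes; each subscriber keeps its own pointer
    so it only receives records it has not consumed yet.
    """
    snapshot: dict = field(default_factory=dict)   # current state of the source table
    changes: list = field(default_factory=list)    # ordered change log
    pointers: dict = field(default_factory=dict)   # subscriber -> position in the log

    def write(self, key, row, mode):
        # mode: "C" create, "U" update, "D" delete (illustrative codes)
        if mode == "D":
            self.snapshot.pop(key, None)
        else:
            self.snapshot[key] = row
        self.changes.append((key, row, mode))

    def fetch(self, subscriber):
        if subscriber not in self.pointers:
            # First request: initial full load, then track deltas from this point.
            self.pointers[subscriber] = len(self.changes)
            return [(k, r, "C") for k, r in self.snapshot.items()]
        # Later requests: only the changes since this subscriber's last fetch.
        start = self.pointers[subscriber]
        self.pointers[subscriber] = len(self.changes)
        return self.changes[start:]


# Hypothetical usage with two MARA-like material records
q = DeltaQueue()
q.write("M-01", {"MTART": "FERT"}, "C")
q.write("M-02", {"MTART": "ROH"}, "C")
full = q.fetch("adf")      # first run: full load of both rows
q.write("M-02", {"MTART": "HALB"}, "U")
delta = q.fetch("adf")     # second run: only the update
empty = q.fetch("adf")     # nothing new since the last fetch
```

Keeping one pointer per subscriber is what allows several consumers to read the same provider independently, each at its own pace.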

SLT Replication Server (ECC) setup:

Log in to the ECC replication server and enter transaction code LTRC to set up the replication process.

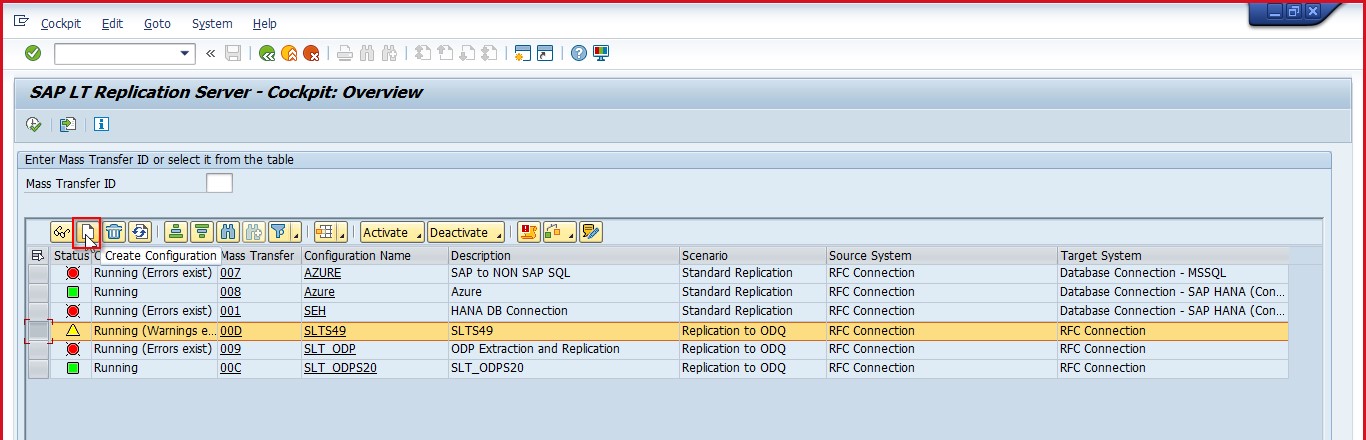

This opens the SAP LT Replication Server Cockpit.

Click the Create button to start the configuration wizard.

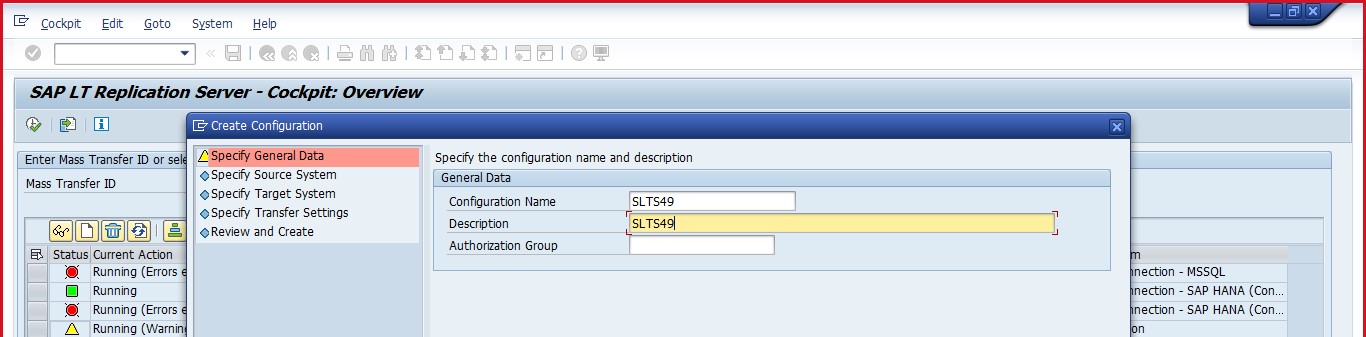

Enter an appropriate name for the configuration and click Continue.

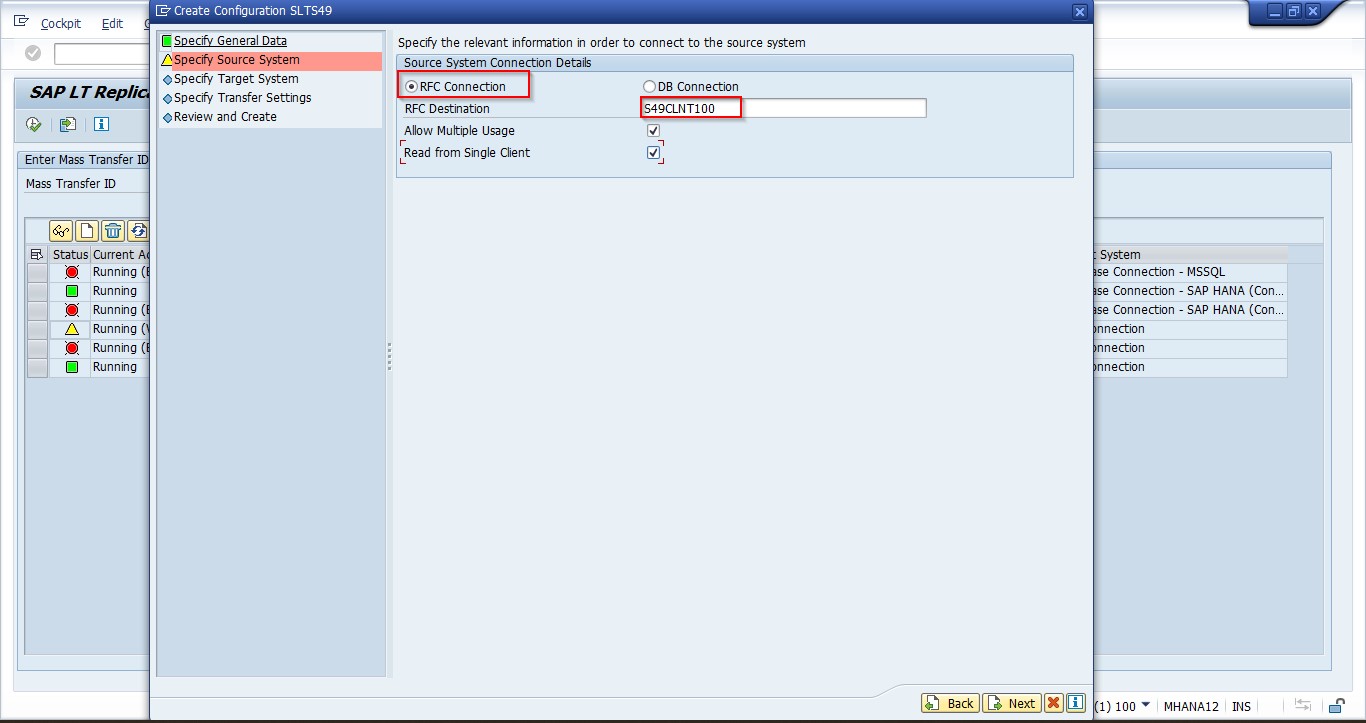

Select the RFC Connection option and enter the RFC destination of the source system.

Optionally, select the Read from Single Client option if your requirements call for it.

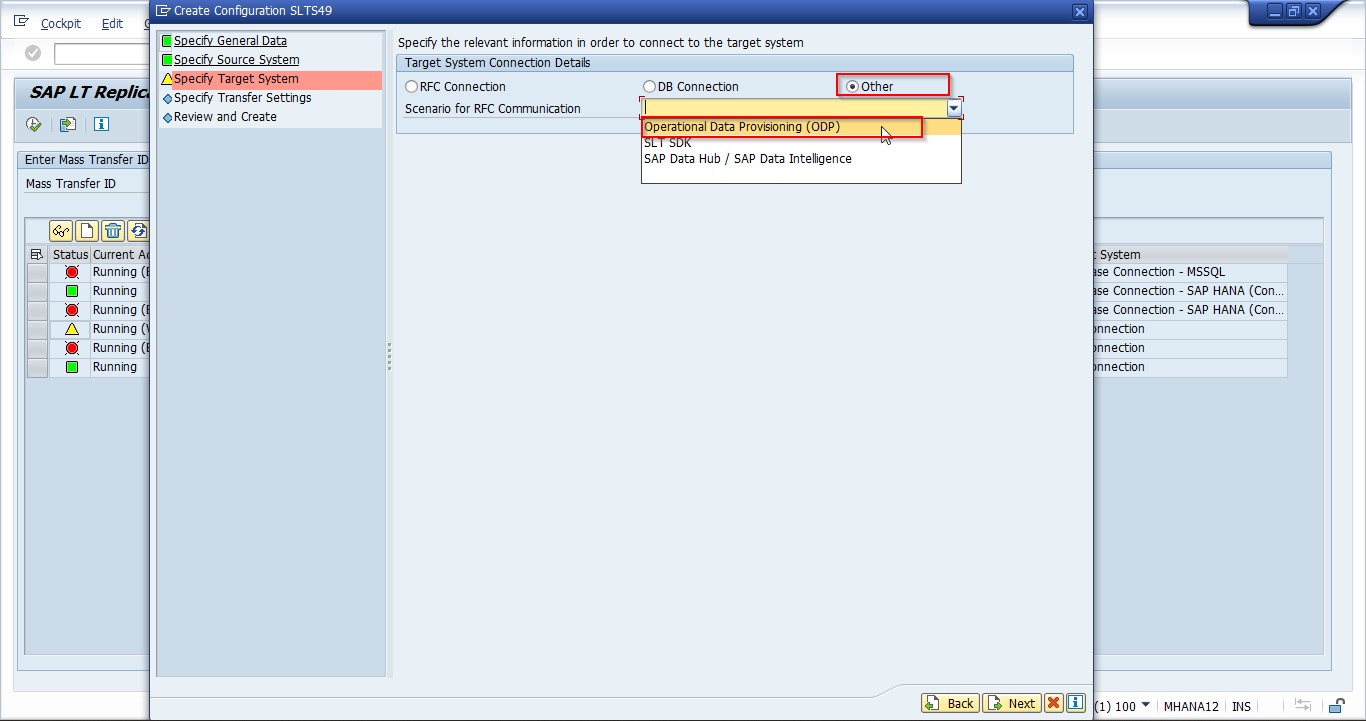

Choose the “Other” option, select “Operational Data Provisioning (ODP)”, and click Next.

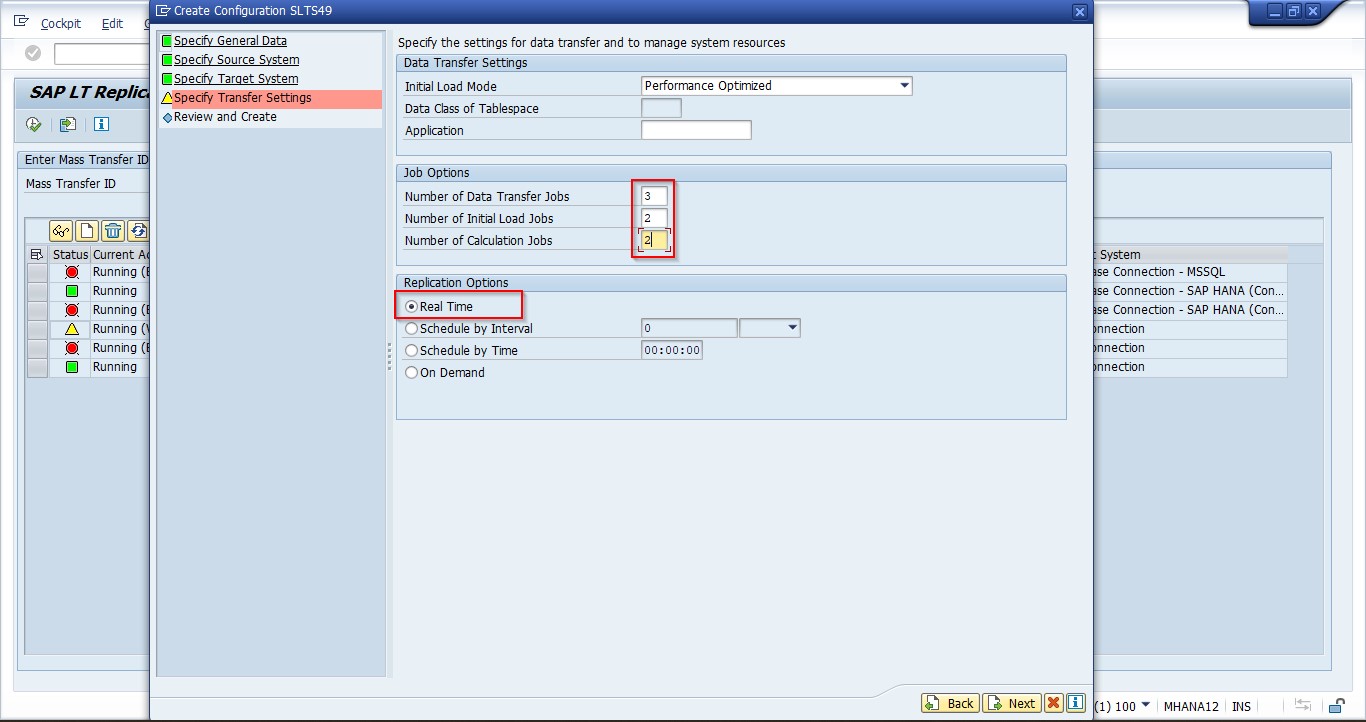

Enter the number of parallel jobs as shown in the screenshot above (the right value depends on your requirements) and choose the replication option; in this case we chose “Real Time”.

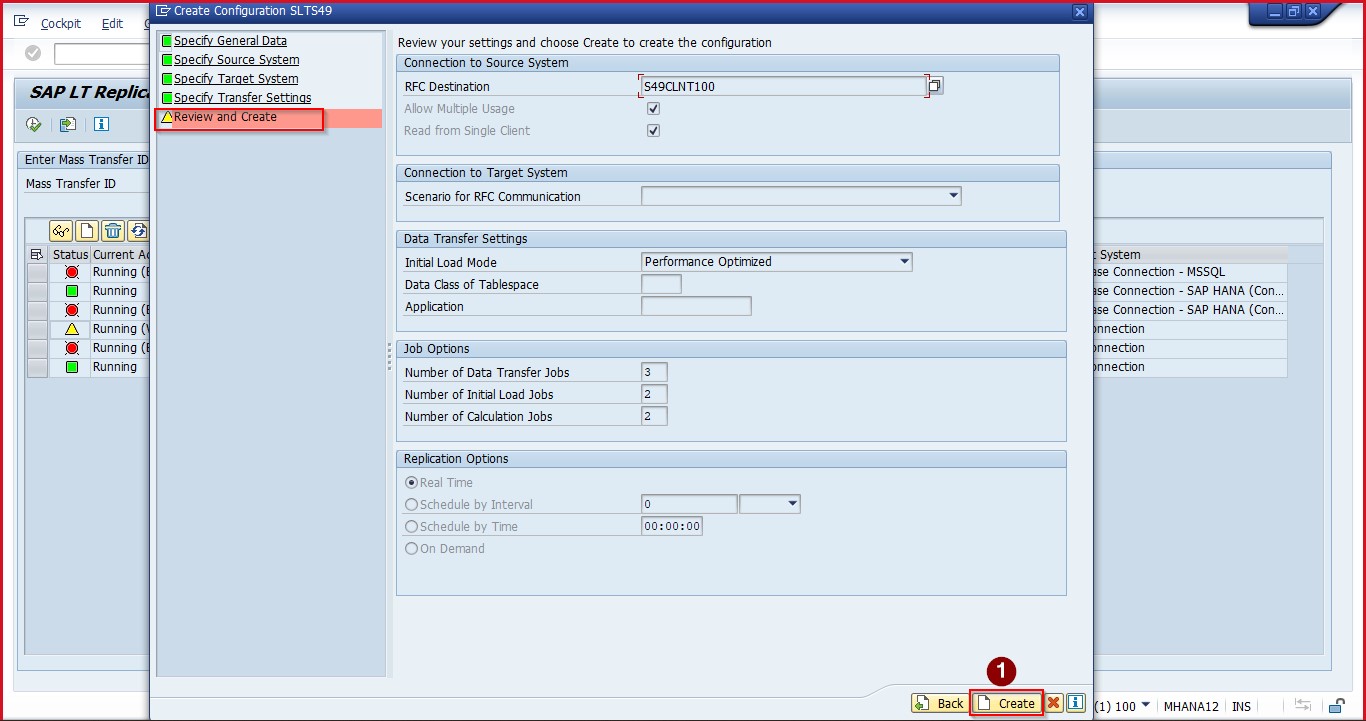

Review all the parameters and click Create to complete the SAP SLT replication setup.
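The wizard choices above can be captured as a simple record, for example to document or review a configuration before clicking Create. This is a hypothetical Python sketch, not an SAP API: the class `SltConfig`, its field names, and the sample values are illustrative assumptions. The only rule it enforces beyond completeness is the one this article relies on, namely that the target is ODP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SltConfig:
    """Hypothetical summary of the LTRC wizard choices (not an SAP API)."""
    name: str                      # configuration name entered in the wizard
    rfc_destination: str           # RFC destination of the source system
    read_from_single_client: bool  # optional wizard setting
    target: str                    # "Operational Data Provisioning (ODP)" here
    parallel_jobs: int             # depends on data volume and available resources
    replication_option: str        # "Real Time" in this article

    def validate(self) -> list:
        """Return a list of problems; an empty list means the record looks complete."""
        problems = []
        if not self.name.strip():
            problems.append("configuration name is empty")
        if not self.rfc_destination.strip():
            problems.append("RFC destination is empty")
        if self.target != "Operational Data Provisioning (ODP)":
            problems.append("target must be ODP for the Azure SAP CDC connector")
        if self.parallel_jobs < 1:
            problems.append("at least one parallel job is required")
        return problems


# Hypothetical values mirroring the choices made in the walkthrough
cfg = SltConfig("ZADF_CDC", "ECC_SOURCE", False,
                "Operational Data Provisioning (ODP)", 4, "Real Time")
print(cfg.validate())  # an empty list means no problems were found
```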

So far, we have seen how to configure the SAP replication system.

Authorization Access in SAP:

The user needs the following authorization roles in both the source and replication systems:

SAP_IUUC_REPL_ADMIN

SAP_IUUC_REPL_REMOTE

SAP_IUUC_REPL_DISPLAY
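If you collect the roles assigned to the user in each system (for example, exported from the user's role list), a quick check like the following flags which of the three required roles are still missing. This is a hypothetical Python sketch: the function name, system labels, and input format are assumptions; only the role names come from the list above.

```python
# Role names taken from the list above
REQUIRED_ROLES = {
    "SAP_IUUC_REPL_ADMIN",
    "SAP_IUUC_REPL_REMOTE",
    "SAP_IUUC_REPL_DISPLAY",
}


def missing_roles(assigned_by_system):
    """Map each system to the required SLT roles its user is still missing.

    Systems that already have all three roles are left out of the result.
    """
    return {
        system: sorted(REQUIRED_ROLES - set(roles))
        for system, roles in assigned_by_system.items()
        if REQUIRED_ROLES - set(roles)
    }


# Hypothetical role assignments for the same user in both systems
assignments = {
    "source": ["SAP_IUUC_REPL_ADMIN", "SAP_IUUC_REPL_REMOTE", "SAP_IUUC_REPL_DISPLAY"],
    "replication": ["SAP_IUUC_REPL_DISPLAY"],
}
gaps = missing_roles(assignments)
print(gaps)
```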

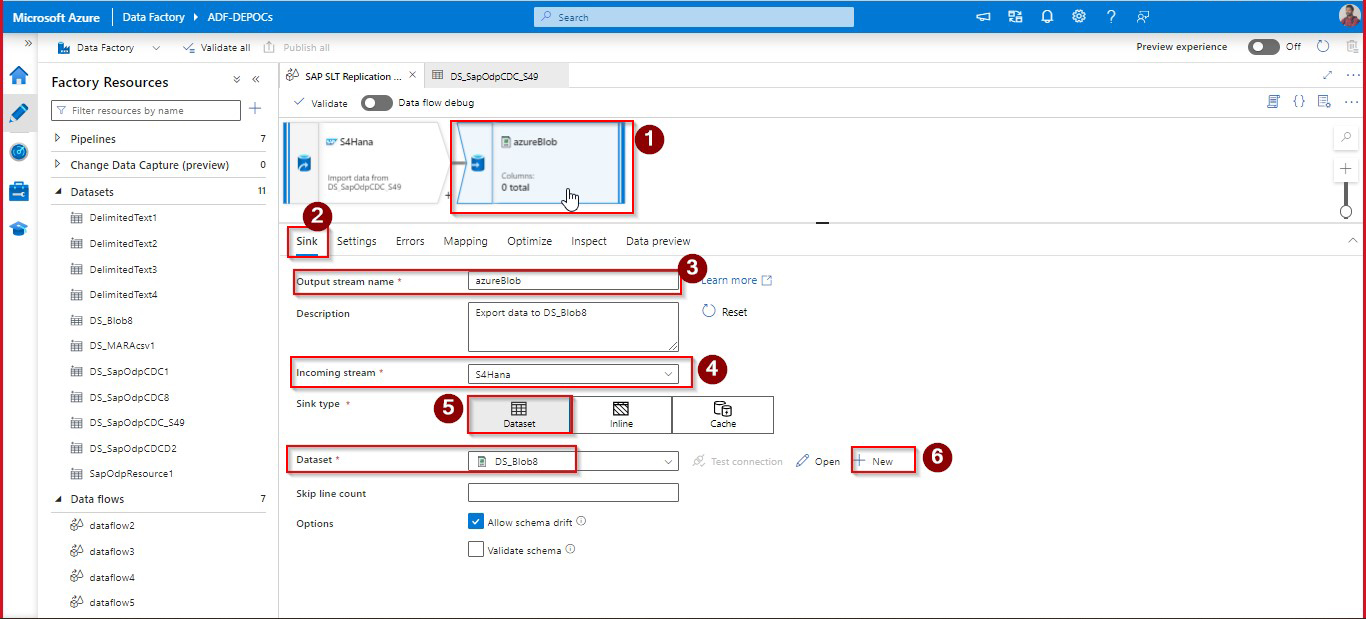

After setting up the replication server in SAP, add Azure Blob Storage as the sink (target) system.

The sink is added by creating a new Azure Blob Storage dataset, as indicated by number 6.

Select the incoming stream, which is our source.

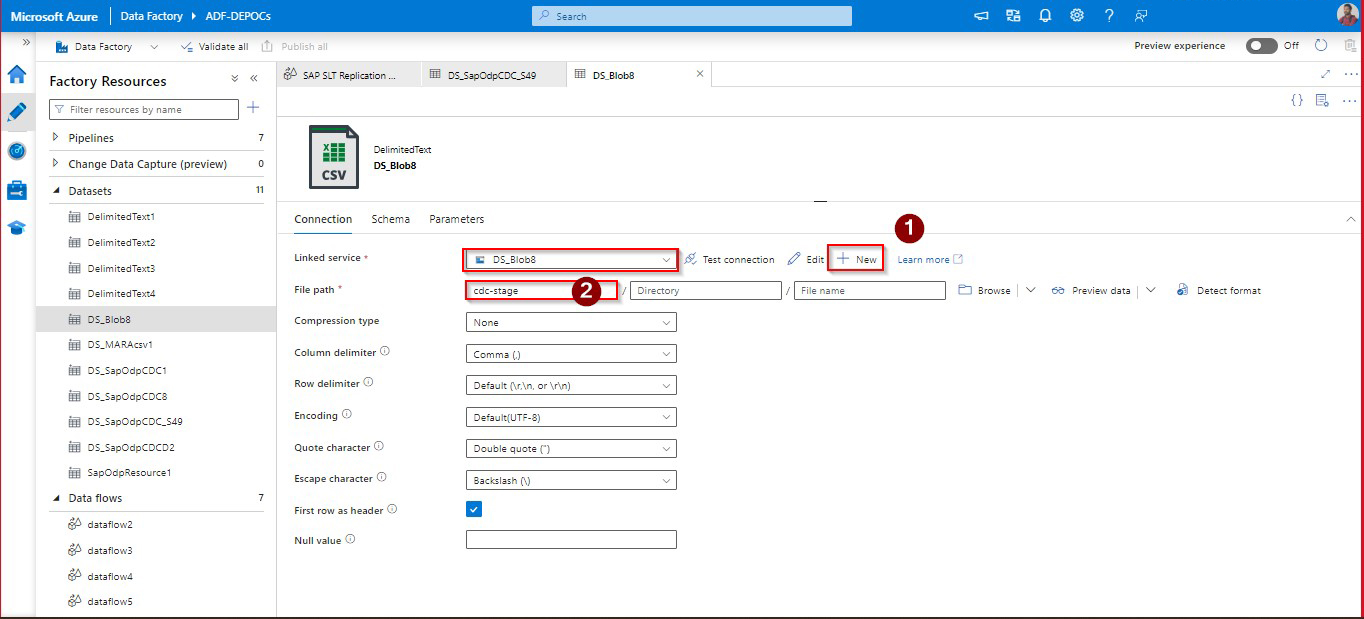

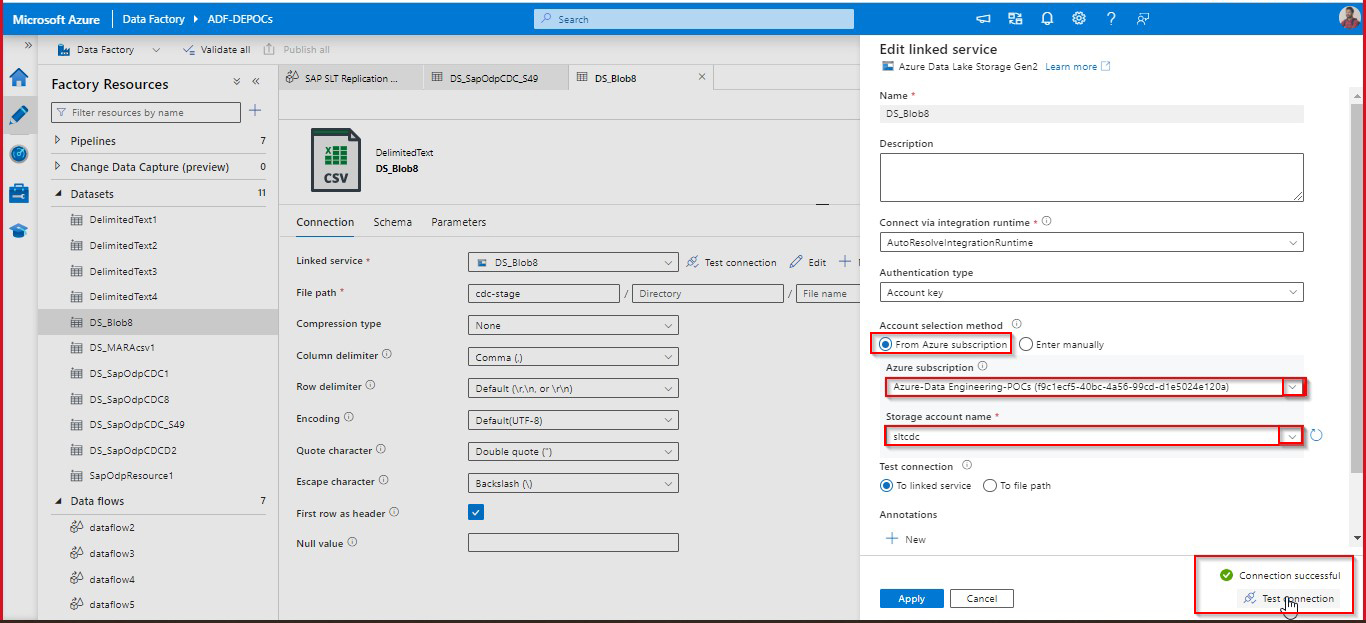

Create the linked service, then select the corresponding folder under File path. The linked service setup is shown below.

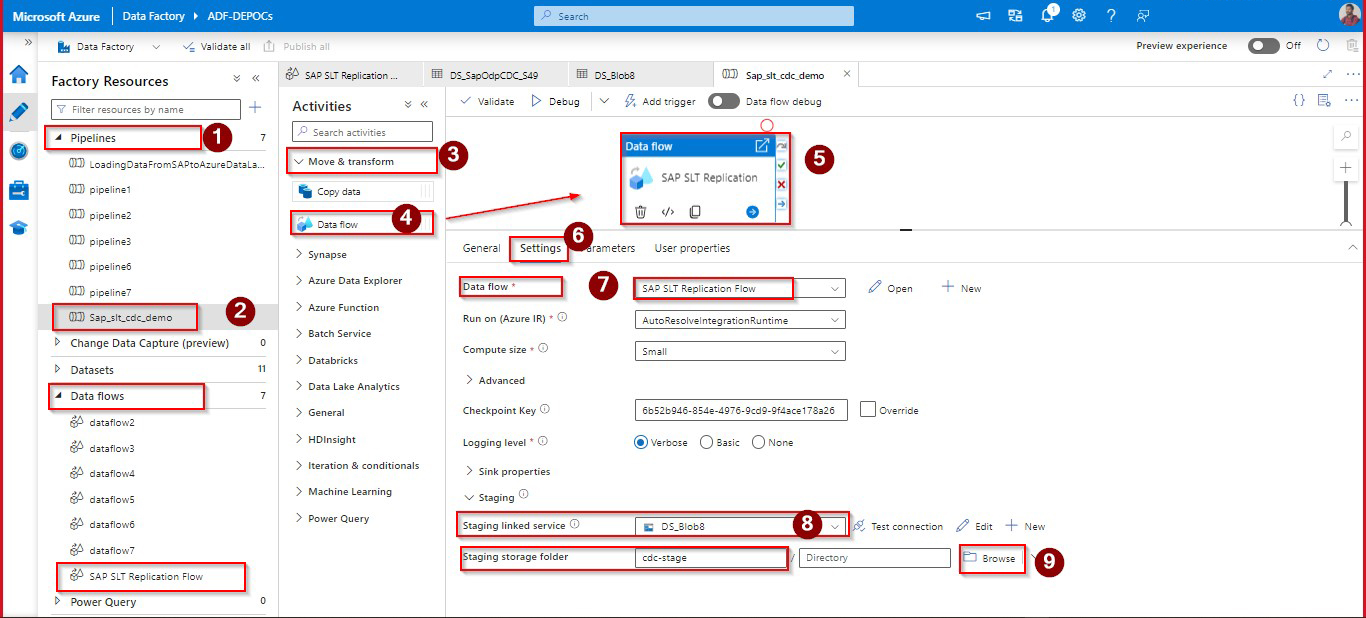

Once the linked service is set up successfully, call this data flow from a pipeline.

Create a pipeline with an appropriate name, drag a Data flow activity onto the canvas, and select the previously created data flow, as mentioned in point 7.

Provide the staging storage account and container. With all these settings in place, run the pipeline.

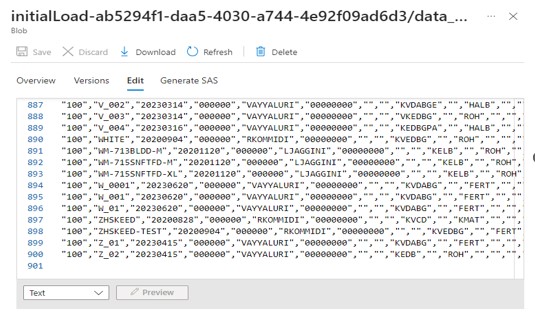
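For reference, the pipeline built in these steps corresponds roughly to a JSON definition with a single Execute Data Flow activity that points to the data flow and to the staging storage. The Python sketch below assembles such a definition as a dictionary; the shape reflects our understanding of the ADF pipeline JSON (`ExecuteDataFlow`, `DataFlowReference`, `staging`), and all pipeline, data flow, and linked service names are hypothetical, so compare it with the JSON code view of your own pipeline before relying on it.

```python
import json


def execute_dataflow_pipeline(pipeline_name, dataflow_name,
                              staging_linked_service, staging_folder):
    """Build an ADF-style pipeline definition with one Execute Data Flow activity.

    All names are hypothetical; verify the shape against your factory's JSON view.
    """
    return {
        "name": pipeline_name,
        "properties": {
            "activities": [
                {
                    "name": "RunSapCdcDataFlow",
                    "type": "ExecuteDataFlow",
                    "typeProperties": {
                        # The data flow created earlier (source: SAP CDC, sink: Blob Storage)
                        "dataflow": {
                            "referenceName": dataflow_name,
                            "type": "DataFlowReference",
                        },
                        # Staging storage account (via linked service) and container/folder
                        "staging": {
                            "linkedService": {
                                "referenceName": staging_linked_service,
                                "type": "LinkedServiceReference",
                            },
                            "folderPath": staging_folder,
                        },
                    },
                }
            ]
        },
    }


pipeline = execute_dataflow_pipeline(
    "pl_sap_cdc_mara", "df_sap_cdc_mara", "ls_staging_blob", "staging")
print(json.dumps(pipeline, indent=2))
```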

As designed, the first pipeline run loaded the full data set of the source table (MARA).

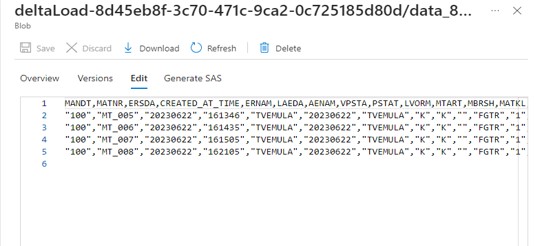

On the second run, delta (CDC) data was captured and loaded into Blob Storage.

On the third run, another set of CDC data was loaded into Blob Storage.
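Downstream consumers usually apply these CDC files to a copy of the table rather than only appending them. The Python sketch below shows one way to do that for MARA, keyed by material number (`MATNR`) and assuming a single client. It assumes each row carries an ODP-style change-mode column (`ODQ_CHANGEMODE` with `C`, `U`, or `D`); the actual column names written by your data flow may differ, so check the files in Blob Storage and adjust `key` and `mode_field` accordingly. The function and sample data are hypothetical.

```python
def apply_changes(target, changes, key="MATNR", mode_field="ODQ_CHANGEMODE"):
    """Apply CDC rows, in the order received, to an in-memory copy of the table.

    Assumption: each row has a change-mode column with "C" (create),
    "U" (update) or "D" (delete). Column names are hypothetical defaults.
    """
    for row in changes:
        k = row[key]
        mode = row[mode_field]
        if mode == "D":
            target.pop(k, None)
        elif mode in ("C", "U"):
            # Store the business columns only, without the technical change-mode column
            target[k] = {c: v for c, v in row.items() if c != mode_field}
        else:
            raise ValueError(f"unknown change mode {mode!r} for key {k!r}")
    return target


# Hypothetical rows standing in for a full load followed by one delta run
mara = {}
first_run = [
    {"MATNR": "100", "MTART": "FERT", "ODQ_CHANGEMODE": "C"},
    {"MATNR": "200", "MTART": "ROH", "ODQ_CHANGEMODE": "C"},
]
second_run = [
    {"MATNR": "200", "MTART": "HALB", "ODQ_CHANGEMODE": "U"},
    {"MATNR": "100", "MTART": "FERT", "ODQ_CHANGEMODE": "D"},
]
apply_changes(mara, first_run)
apply_changes(mara, second_run)
print(mara)
```

Applying rows strictly in arrival order matters: an update followed by a delete for the same key must end with the row removed.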

This completes the load of SAP S/4HANA SLT replication data into Azure Blob Storage using the SAP CDC connector in an Azure Data Factory data flow.


Author Bio:

Syam Kumar ANGALAKURTHI

Team Lead - Analytics

7 years of extensive experience in Azure data integration and application integration. Has worked across industries including retail, healthcare, oil and natural gas, manufacturing, and banking and finance.